Data Analytics and Engineering for Supply Chain

Yalantis provides end-to-end data engineering, building the foundational “single source of truth” your organization can rely on. We turn your fragmented, untrustworthy data into your most valuable and reliable strategic asset.

Challenges Data Analytics and Engineering Solves

- Conflicting reports and no single source of truth

Instead of finance and operations debating whose freight cost report is correct, we build a single, governed data warehouse. This unifies your TMS, WMS, and ERP data, eliminating data chaos so you can identify the true root cause of problems.

- Failed digital transformation and AI projects

Forget launching expensive AI or predictive analytics projects only to see them fail. We build the clean, reliable, and structured data foundation that is the non-negotiable prerequisite for trustworthy results from any strategic tech investment, so you avoid the “garbage in, gospel out” trap.

- Operational errors from inaccurate inventory data

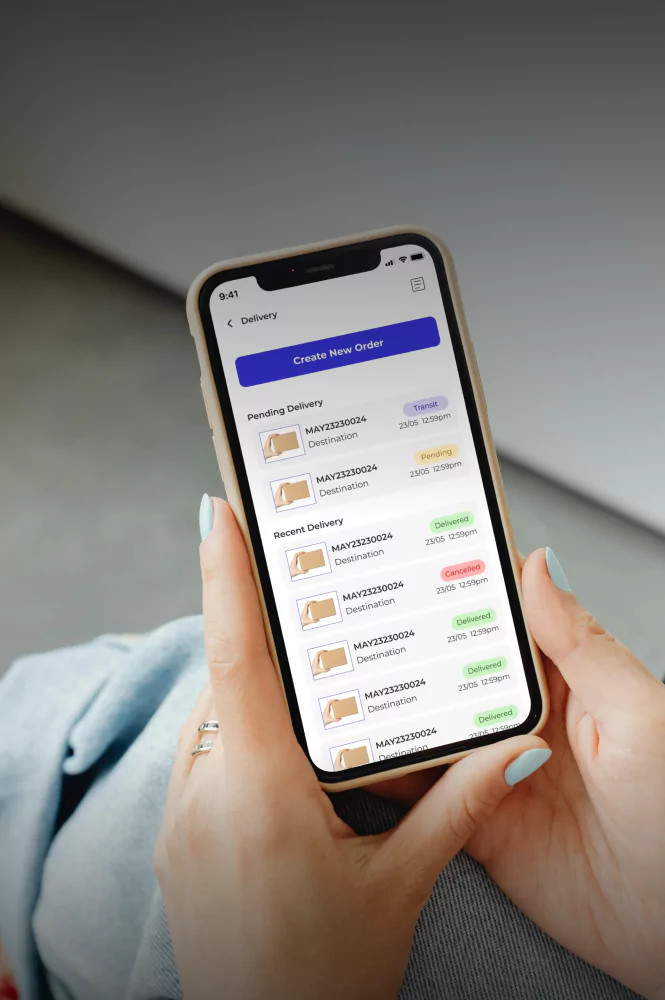

Instead of making costly mistakes like mis-picks, stockouts, or shipping to the wrong address, our solution provides a trusted, “golden record” for your core data. This ensures your physical operations are running on accurate, up-to-date information.

- Compliance failures and audit risks

Forget the panic of manually pulling spreadsheets for a regulatory or financial audit. We build an auditable, verifiable data trail, allowing you to confidently produce a single, unalterable record of your operations (e.g., for GxP or IFTA) on demand.

- Spiraling freight costs from untrusted data

Instead of paying inaccurate freight invoices or making poor routing decisions, we unify your carrier portals and TMS data. This gives you the trusted, granular insights needed to dispute penalties, optimize routes, and control logistics spend.

- Critical decisions made on spreadsheets and gut-feel

You believe your “on-time delivery” KPI is failing, but you can’t prove why. We transform this guesswork into certainty by integrating all the relevant data, empowering you to stop managing by spreadsheet and start making data-driven decisions.

Our Data Analytics and Engineering Services

-

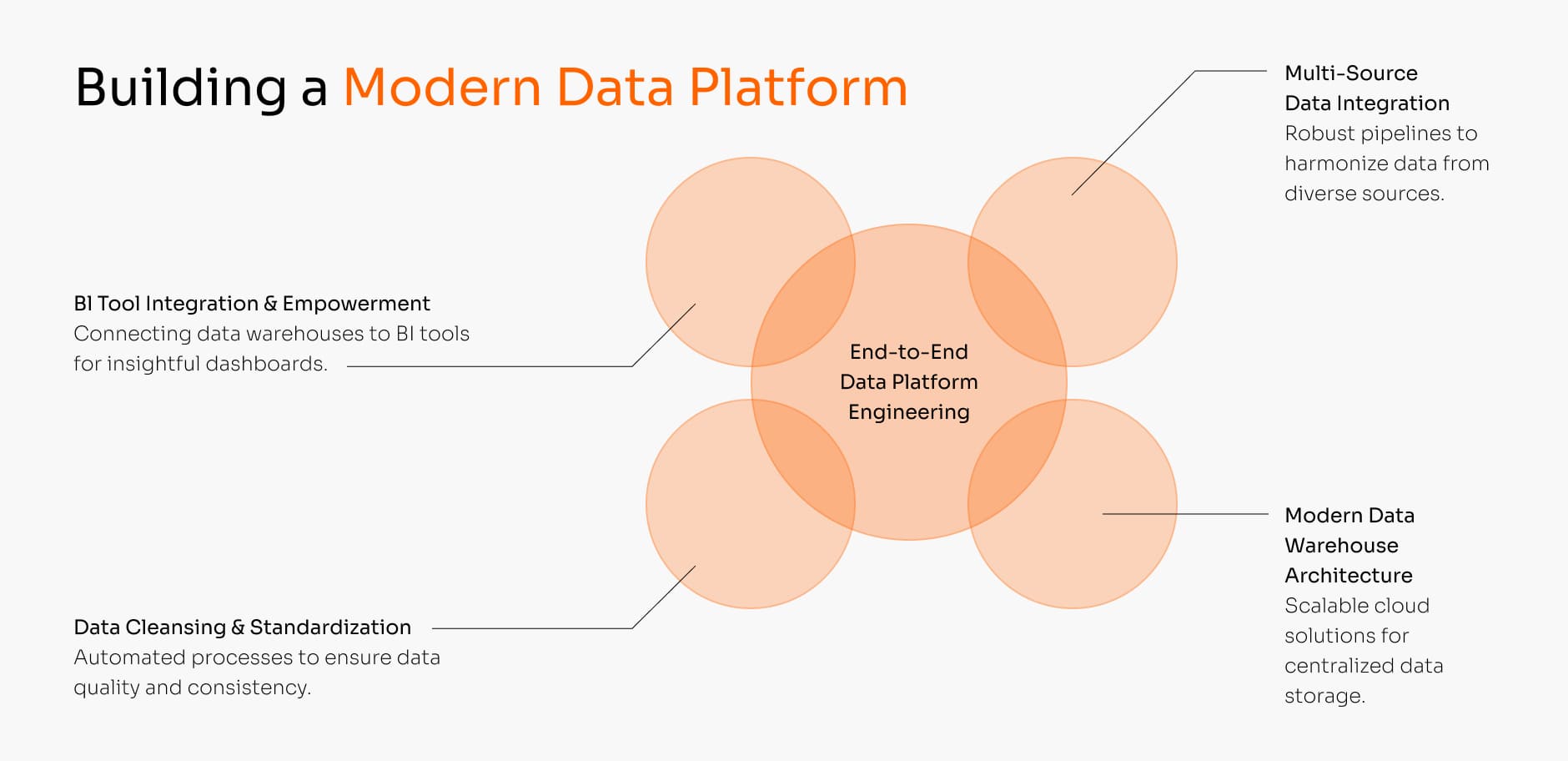

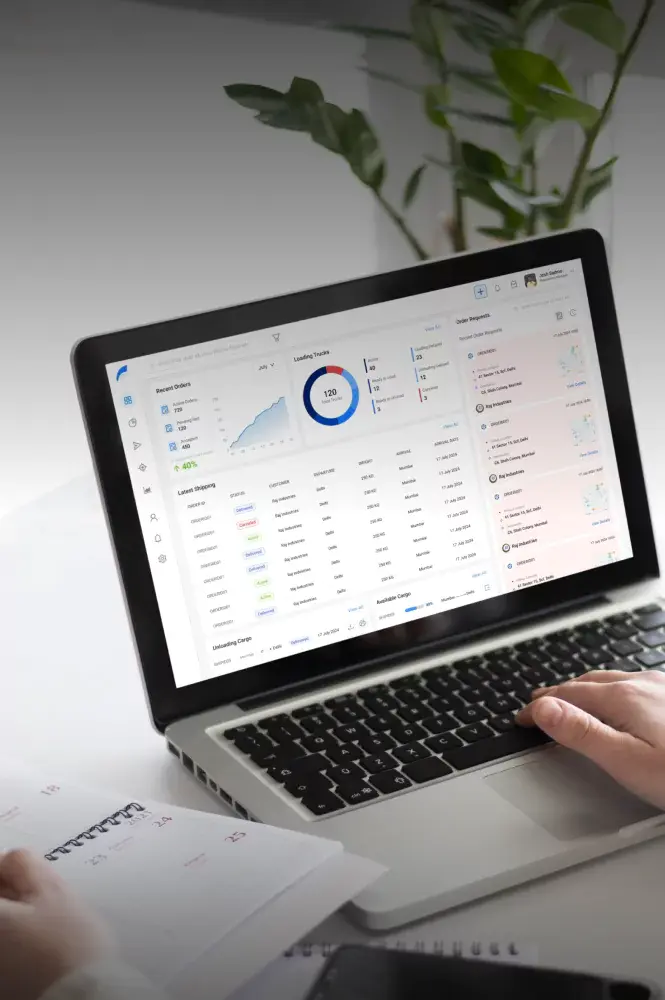

End-to-End Data Platform Engineering

A complete, managed service to design, build, and deploy your modern, centralized data platform. We architect the robust, scalable data warehouse and automated pipelines that serve as your organization’s single source of truth.

Key Features:

- Multi-Source Data Integration

Robust ETL/ELT pipeline development to extract, cleanse, and harmonize data from all your disparate sources: TMS, WMS, ERP, carrier portals, IoT sensors, and legacy systems.

- Modern Data Warehouse Architecture

Design and implementation of a scalable cloud data warehouse (e.g., Snowflake, BigQuery, Redshift) to serve as the central hub for all your analytics.

- Data Cleansing & Standardization

Automated processes to de-duplicate, standardize, and validate your data according to your business rules, ensuring all reports are built on clean, trustworthy information.

- BI Tool Integration & Empowerment

Connecting your new data warehouse to your BI tools (Power BI, Tableau, Looker) and building the first set of critical, cross-functional dashboards that were previously impossible.

-

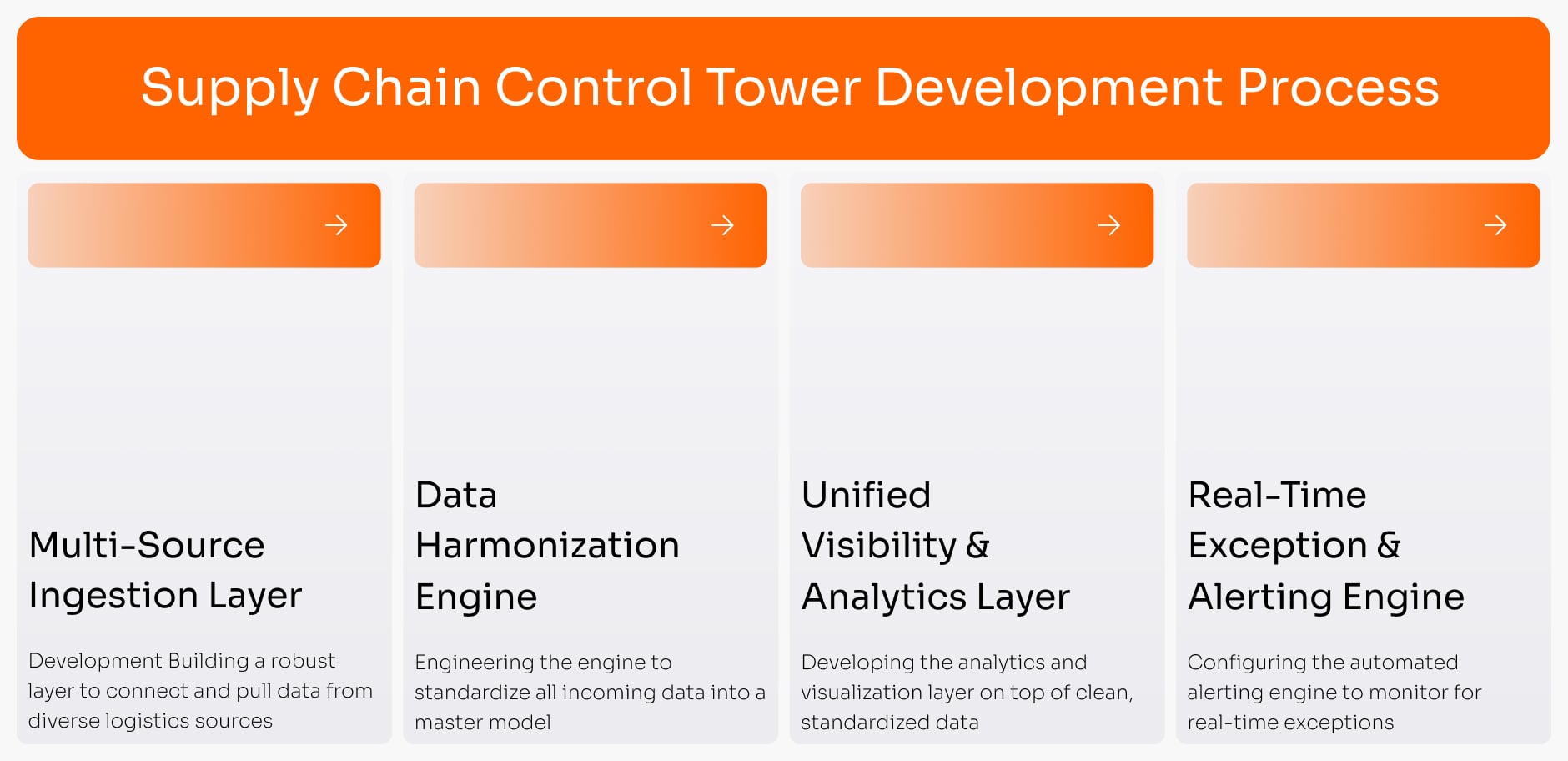

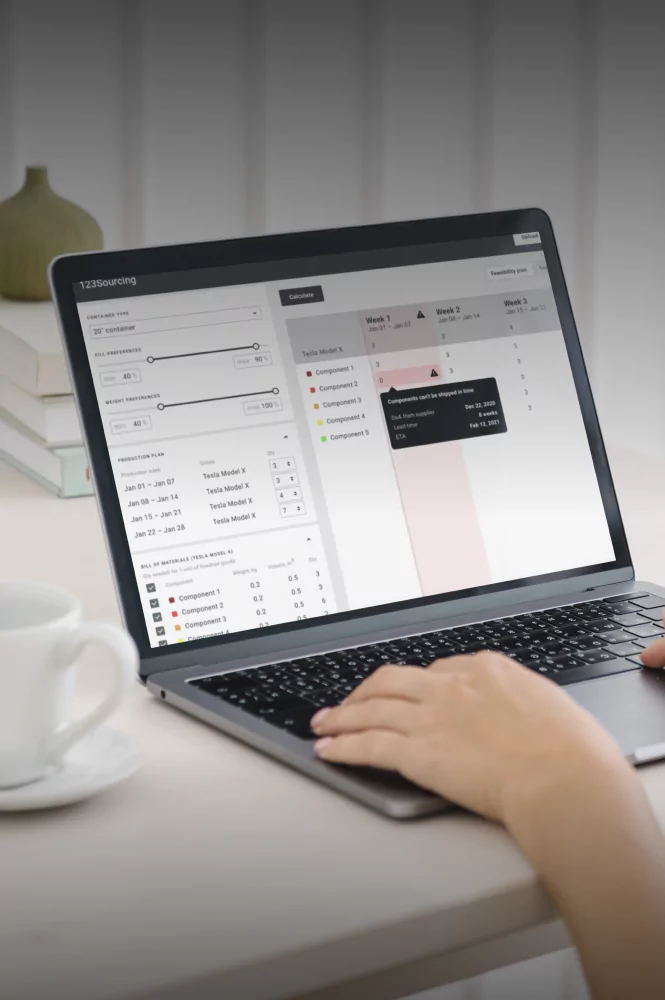

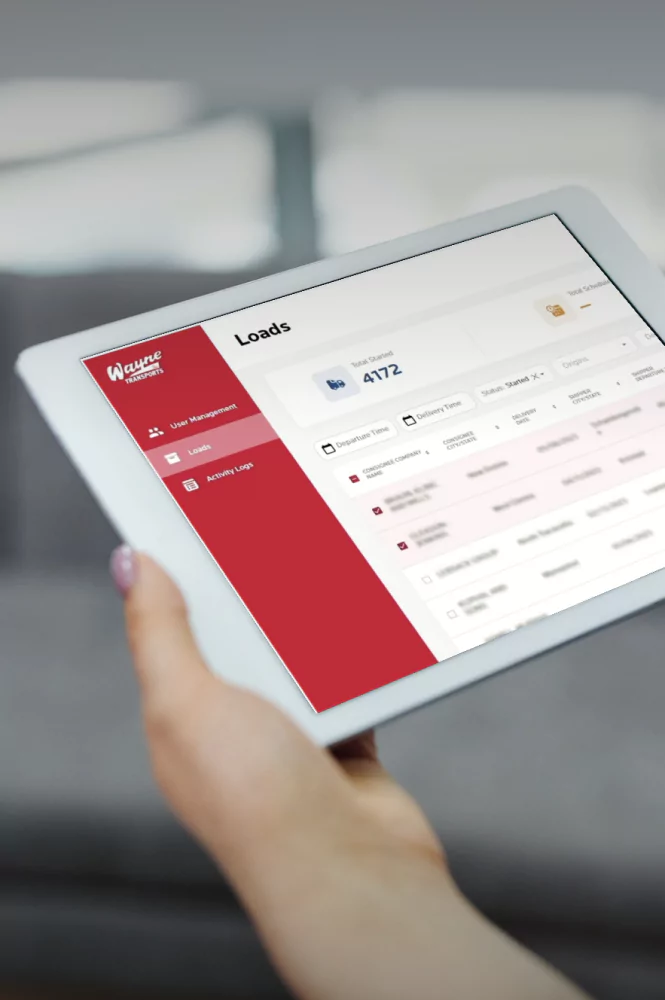

Supply Chain Control Tower Development

A complete service to build your Supply Chain Control Tower. We create a centralized data aggregation and analytics platform that provides end-to-end visibility over all your shipments, regardless of carrier.

Key Service Deliverables:

- Multi-Source Ingestion Layer Development

Building a robust ingestion layer to connect and pull data from diverse logistics sources, including carrier APIs, legacy EDI feeds, fleet telematics, IoT sensors, and automated file transfers.

- Data Harmonization Engine

Engineering the harmonization engine to standardize all incoming data into your master model. We map disparate carrier statuses to a single, unified status code for your business.

- Unified Visibility & Analytics Layer

Developing the analytics and visualization layer on top of clean, standardized data. This includes building unified “control tower” dashboards, KPI reports, and cross-carrier performance analyses.

- Real-Time Exception & Alerting Engine

Configuring the automated alerting engine to monitor for exceptions in real time and flag critical issues such as temperature excursions, significant delays, or missed pickups.
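As a rough illustration of the harmonization and alerting deliverables above, the sketch below maps carrier-specific statuses to one unified code and flags the three exception types mentioned. The carrier names, status strings, thresholds, and function names are all hypothetical and chosen for illustration only; a production engine would load mappings and thresholds from governed configuration.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical mapping from (carrier, raw status) to a unified status code.
# These values are illustrative and do not come from any real carrier API.
STATUS_MAP = {
    ("carrier_a", "OUT FOR DELIVERY"): "IN_TRANSIT",
    ("carrier_a", "DELIVERED"): "DELIVERED",
    ("carrier_b", "en_route"): "IN_TRANSIT",
    ("carrier_b", "pod_received"): "DELIVERED",
    ("carrier_b", "pickup_missed"): "PICKUP_MISSED",
}


@dataclass
class ShipmentEvent:
    shipment_id: str
    carrier: str
    raw_status: str
    delay_hours: float = 0.0
    temperature_c: Optional[float] = None  # None when no sensor reading exists


def harmonize_status(carrier: str, raw_status: str) -> str:
    """Return the unified status, or UNKNOWN so unmapped values surface for review."""
    return STATUS_MAP.get((carrier.lower(), raw_status.strip()), "UNKNOWN")


def detect_exceptions(
    event: ShipmentEvent,
    max_delay_hours: float = 4.0,       # assumed threshold
    temp_range_c=(2.0, 8.0),            # assumed cold-chain range
) -> List[str]:
    """Collect alert labels for one event based on simple threshold rules."""
    alerts = []
    status = harmonize_status(event.carrier, event.raw_status)
    if status == "PICKUP_MISSED":
        alerts.append("missed pickup")
    if status == "UNKNOWN":
        alerts.append("unmapped status")
    if event.delay_hours > max_delay_hours:
        alerts.append("significant delay")
    if event.temperature_c is not None:
        low, high = temp_range_c
        if not low <= event.temperature_c <= high:
            alerts.append("temperature excursion")
    return alerts
```

Returning `UNKNOWN` for unmapped statuses, rather than raising an error, is one possible design choice: it keeps ingestion running while making gaps in the mapping visible as alerts.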

-

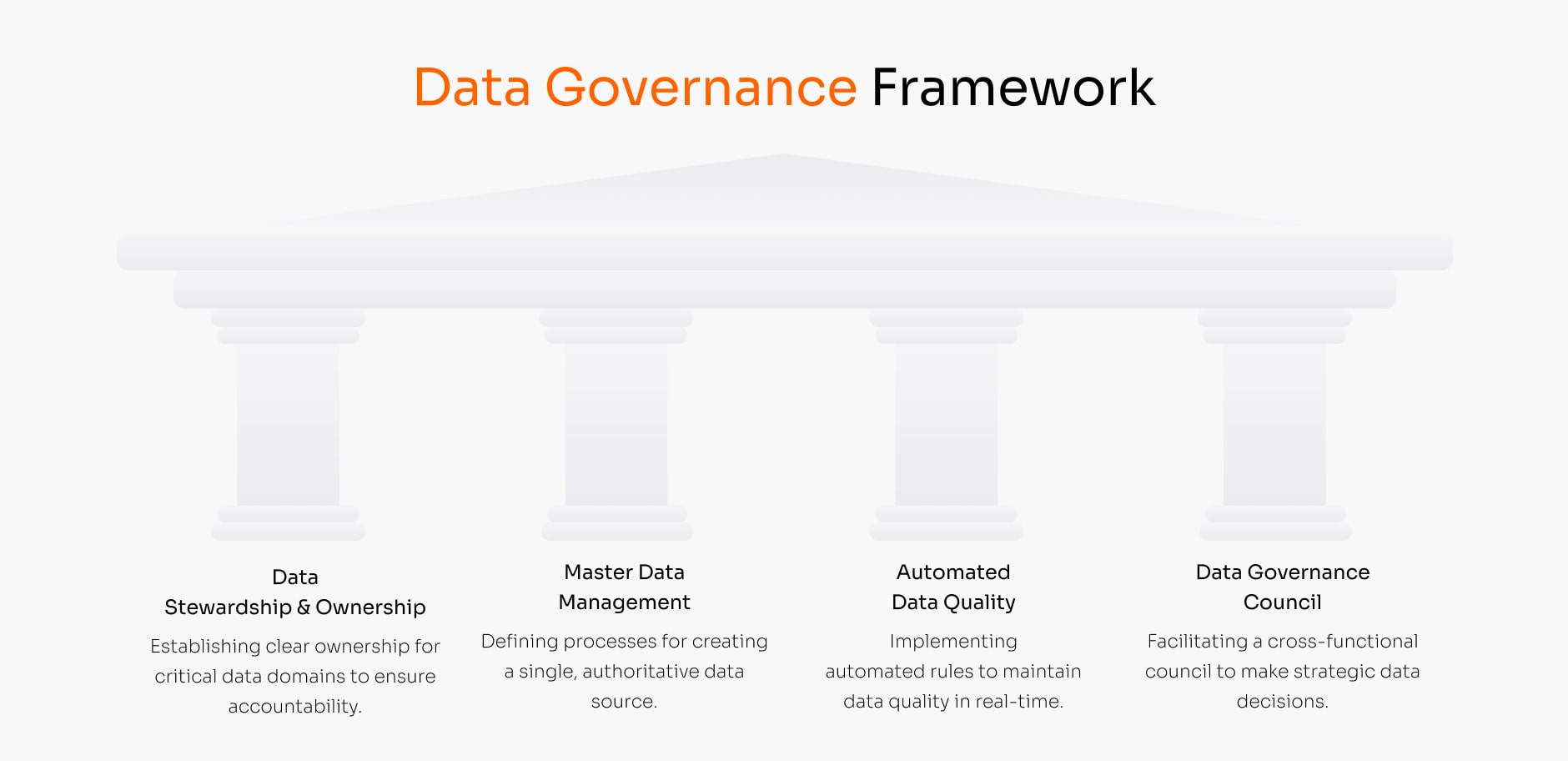

Data Governance Framework & Strategy

A strategic service to establish the rules, roles, and processes required to manage your data as an enterprise asset. We build the framework that ensures your data remains clean, secure, and trusted long-term.

Key Service Deliverables:

- Data Stewardship & Ownership Models

Identifying and establishing clear owners for each critical data domain (e.g., “customer,” “product,” “carrier”) to create clear lines of responsibility.

- Master Data Management (MDM) Strategy

Defining the process and rules for creating the “golden record” for your core business entities, ensuring a single, authoritative definition for all systems.

- Automated Data Quality Rules & Monitoring

Defining and embedding automated quality rules directly into your data pipelines to flag, quarantine, and remediate bad data before it pollutes your analytics.

- Data Governance Council & Charter

Facilitating the creation of a cross-functional governance council with executive sponsorship to make decisions and break down political data silos.
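To make the idea of embedded quality rules more concrete, here is a minimal sketch of a pipeline step that checks each record against named rules and quarantines failures instead of loading them. The rule names, field names, and warehouse codes are assumptions made for this example, not part of any specific platform.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]
Rule = Tuple[str, Callable[[Record], bool]]

# Hypothetical business rules for an inventory feed; each returns True when the record passes.
RULES: List[Rule] = [
    ("sku_present", lambda r: bool(str(r.get("sku") or "").strip())),
    ("quantity_non_negative",
     lambda r: isinstance(r.get("quantity"), int) and r["quantity"] >= 0),
    ("warehouse_known", lambda r: r.get("warehouse") in {"WH-01", "WH-02"}),
]


def apply_rules(
    records: List[Record], rules: List[Rule] = RULES
) -> Tuple[List[Record], List[Record]]:
    """Split records into clean rows and quarantined rows annotated with failed rule names."""
    clean, quarantined = [], []
    for record in records:
        failed = [name for name, check in rules if not check(record)]
        if failed:
            # Keep the original values and record why the row was held back.
            quarantined.append({**record, "failed_rules": failed})
        else:
            clean.append(record)
    return clean, quarantined
```

Quarantined rows keep their original values plus the list of failed rules, so a data steward can review and remediate them rather than losing the data.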

-

Data Quality Assessment & Cleansing Service

A focused engagement to analyze the data within your critical systems, identify the root cause of quality issues, and perform a targeted, high-impact data cleanup.

Key Service Deliverables:

- Data Quality Profiling & Scoring

An automated analysis of your key data domains to identify and quantify common quality issues (e.g., duplicates, missing values, incorrect formats).

- Root Cause Analysis of Data Errors

Investigating the systems and processes that are creating bad data to identify the source of the problem, not just the symptoms.

- Targeted Data Cleansing & Deduplication

A one-time, high-impact project to cleanse, de-duplicate, and standardize a critical data set (like your customer or product master) to provide immediate business value.

- Data Health Scorecard & Reporting

Delivering a comprehensive report that benchmarks your data quality, quantifies the scale of the problem, and provides a clear remediation roadmap.
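A profiling pass of the kind described above could look roughly like the following sketch, which counts records affected by duplicates, missing values, and incorrect formats, then reports the share of issue-free rows as a simple score. The field names, the 5-digit postal code pattern, and the scoring formula are assumptions for illustration; a real scorecard would likely weight issues by business impact.

```python
import re
from collections import Counter
from typing import Dict, List, Pattern

# Assumed format rule for this example: a 5-digit postal code.
POSTAL_CODE = re.compile(r"\d{5}")


def profile(
    records: List[dict],
    key_field: str,
    required_fields: List[str],
    format_checks: Dict[str, Pattern],
) -> dict:
    """Count rows affected by each issue type and score the share of clean rows."""
    seen_keys = set()
    issue_counts = Counter()
    clean_rows = 0
    for record in records:
        issues = set()
        key = record.get(key_field)
        if key in seen_keys:
            issues.add("duplicates")  # repeat of an already-seen key
        seen_keys.add(key)
        for field in required_fields:
            if record.get(field) in (None, ""):
                issues.add("missing_values")
        for field, pattern in format_checks.items():
            value = record.get(field)
            if value not in (None, "") and not pattern.fullmatch(str(value)):
                issues.add("incorrect_formats")
        issue_counts.update(issues)
        if not issues:
            clean_rows += 1
    score = round(100 * clean_rows / len(records), 1) if records else 100.0
    return {
        "rows": len(records),
        "duplicates": issue_counts["duplicates"],
        "missing_values": issue_counts["missing_values"],
        "incorrect_formats": issue_counts["incorrect_formats"],
        "score": score,
    }
```

Each count is the number of rows with at least one issue of that type, so a row with two missing fields is counted once under `missing_values`.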

-

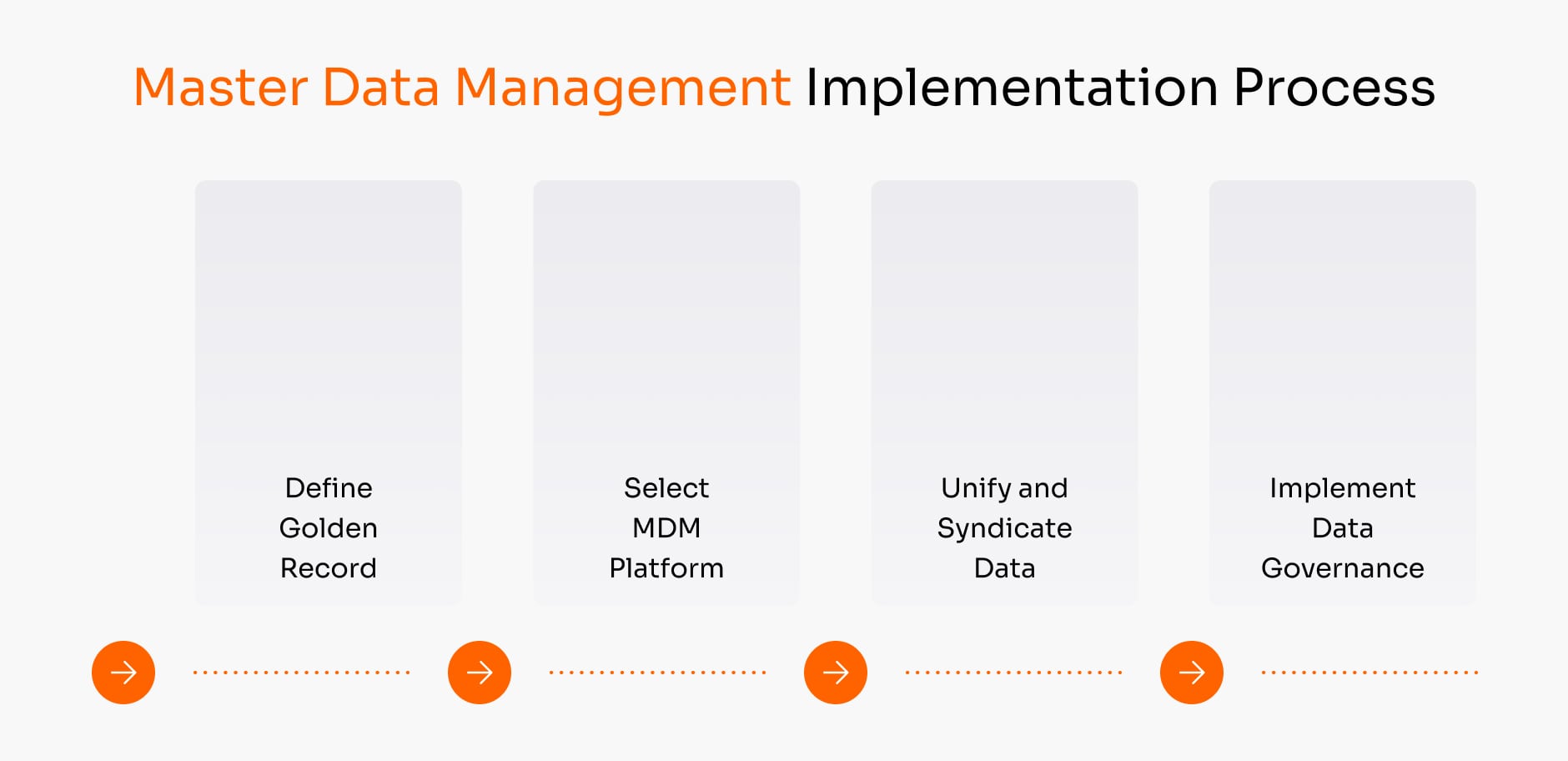

Master Data Management (MDM) Implementation

A specialized engineering service focused on implementing the technical solution to create, manage, and syndicate a single “golden record” for your most critical data.

Key Service Deliverables:

- “Golden Record” Definition & Modeling

A collaborative process to define the authoritative schema for a core entity, such as your global product master, location master, or customer master.

- MDM Platform & Tool Selection

Providing expert, vendor-agnostic guidance on selecting and implementing the right MDM technology to fit your specific needs and budget.

- Data Unification & Syndication

Building the pipelines to consolidate your master data from all source systems and, more importantly, syndicate the “golden record” back to them.

- Data Governance Workflow Implementation

Embedding the MDM platform into your business processes with clear workflows for data stewards to approve, create, and manage master data.
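The consolidation half of this service can be sketched as a survivorship rule that merges several source records for one entity into a single golden record. The rule used here (take each field from the most recently updated record that has a non-empty value, breaking ties by an agreed source priority) is only one common option and is an assumption of this example, as are the record layout and function name. Syndication back to source systems is not shown.

```python
from datetime import date
from typing import Dict, List


def build_golden_record(source_records: List[dict], source_priority: List[str]) -> Dict[str, object]:
    """Merge records for one entity, field by field.

    Each input record is assumed to look like:
    {"source": "ERP", "updated": date(...), "fields": {...}}
    """
    rank = {name: i for i, name in enumerate(source_priority)}
    # Newest first; for equal dates, the higher-priority source comes first.
    ordered = sorted(
        source_records,
        key=lambda r: (-r["updated"].toordinal(), rank.get(r["source"], len(rank))),
    )
    golden: Dict[str, object] = {}
    for record in ordered:
        for field, value in record["fields"].items():
            # The first non-empty value encountered wins for each field.
            if value not in (None, "") and field not in golden:
                golden[field] = value
    return golden


records = [
    {"source": "ERP", "updated": date(2024, 1, 10),
     "fields": {"name": "Acme Corp", "city": "Boston", "phone": ""}},
    {"source": "WMS", "updated": date(2024, 3, 2),
     "fields": {"name": "ACME Corporation", "city": None, "phone": "555-0100"}},
    {"source": "TMS", "updated": date(2024, 3, 2),
     "fields": {"name": "Acme", "city": "", "phone": "555-0199"}},
]
golden = build_golden_record(records, source_priority=["ERP", "TMS", "WMS"])
```

In practice the survivorship rules would be agreed with data stewards per field, since "most recent wins" is not always the right answer for attributes such as legal names.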

Benefits of Our Data Engineering and Analytics Services

- Enable Confident, Data-Driven Decision Making

Eliminate ambiguity by ensuring everyone, from the C-suite to the warehouse floor, is working from the same set of trusted, accurate numbers.

- Reduce Operational Costs & Costly Errors

Prevent multi-million dollar mistakes caused by bad data, such as shipping to the wrong address, holding incorrect inventory, or paying inaccurate freight invoices.

- Accelerate Digital Transformation & AI Adoption

Provide the clean, reliable, and well-structured data foundation that is the non-negotiable prerequisite for any successful AI or predictive analytics project.

- Strengthen Regulatory Compliance & Audit Readiness

Dramatically reduce the time, cost, and risk of audits by maintaining a single, verifiable, and easily accessible record of your operations.

- Eliminate Data Silos and Wasted Meetings

Stop wasting time in meetings debating whose numbers are right. We provide a single source of truth that allows you to spend your time making decisions, not arguing about data.

Insights

BI and advanced analytics solutions for supply chain data analysis

Read a guide on BI and advanced analytics solutions for the supply chain. Learn how to implement each one and read about common tools and techniques for both.

Visualizing supply chain data with logistics dashboards

Learn about different supply chain analytics dashboards and supply chain visibility tools that can help you resolve issues that stop your company from growing.

How to use supply chain optimization technologies

Want to automate logistics processes? Learn how to identify your supply chain issues and fix them using transportation and logistics software.

FAQ

How is this different from just buying a BI tool like Tableau or Power BI?

A BI tool is just a visualization layer; it’s the “last mile.” If you feed it bad data, it will only give you pretty charts of the wrong answers. Our service is the foundational data engineering that ensures the data is accurate, unified, and trustworthy before it ever reaches your BI tool. We fix the “garbage in, garbage out” problem at the source.

What data sources do you typically integrate?

Our service is designed to work with your entire, fragmented data landscape. We are experts at integrating data from core supply chain systems, including your TMS, WMS, ERP (like SAP or Oracle), MES, carrier portals, ELD/telematics systems, IoT sensors, and even legacy AS/400 systems or spreadsheets.

How do you ensure data stays clean after the project is done?

A one-time cleanup is only a temporary fix, so our solution is built for the long term. A core part of our service is implementing the Data Governance Framework and embedding automated data quality monitoring directly into the data pipelines. This ensures that new data quality issues are flagged and remediated in real time, keeping your data warehouse clean and trustworthy.

Can we start with a pilot project?

Absolutely. In fact, we recommend it. We offer a fixed-scope “Data Maturity Assessment” to give you a strategic roadmap. Or, we can do a “Foundational Data Pilot” where we build an end-to-end solution for a single, high-impact domain (like “On-Time Delivery”) to prove the value and process before a full-scale deployment.

What technology do you use for the data warehouse?

We are technology-agnostic and select the best tools for your specific needs. Our expertise is in modern, scalable cloud data platforms like Snowflake, Google BigQuery, and Amazon Redshift, as well as the robust ETL/ELT tools (like Fivetran, dbt, or custom-built) required to feed them.

Contact us

Nick Orlov

IoT advisor

How to get started with IoT development

- Get on a call with our Internet of Things product design experts.

- Tell us about your current challenges and ideas.

- We’ll prepare a detailed estimate and a business offer.

- If everything works for you, we start achieving your goals!