Choosing a tech stack for full-cycle web application development in 2023

One of the most important steps in developing a successful digital software product is picking the right tech stack. Why? Because creating a product is not just about designing a nice user interface (UI) and a convenient user experience (UX); it’s also about designing a stable, secure, and maintainable product that will not only win your customer’s heart but will allow you to scale your business. Here’s where the right technology may help.

While you, as a business owner, are busy with things like elaborating your business idea, defining your product’s pricing model, and coming up with powerful marketing, deciding on technologies for your new app is something you’ll likely leave up to your developers.

Of course, it’s common practice to rely on your development partner’s technology suggestions. If you do, however, you should make sure your partner understands your business needs and takes into account all the important features you’ll be implementing when choosing a technology stack.

At Yalantis, we believe that having a general understanding of the web development stack is a must for a client. It helps us to speak the same technical language and effectively reach your goals. This is why we’ve prepared this article.

Without further ado, let’s explore what a technology stack is and what tools we use at Yalantis to build your web products.

Defining the structure of your web project

You might have guessed that a technology stack is a combination of software tools and programming languages that are used to bring your web or mobile app to life. Roughly speaking, web and mobile apps consist of a frontend and backend, which are the client-facing application and a hidden part that’s on the server, respectively.

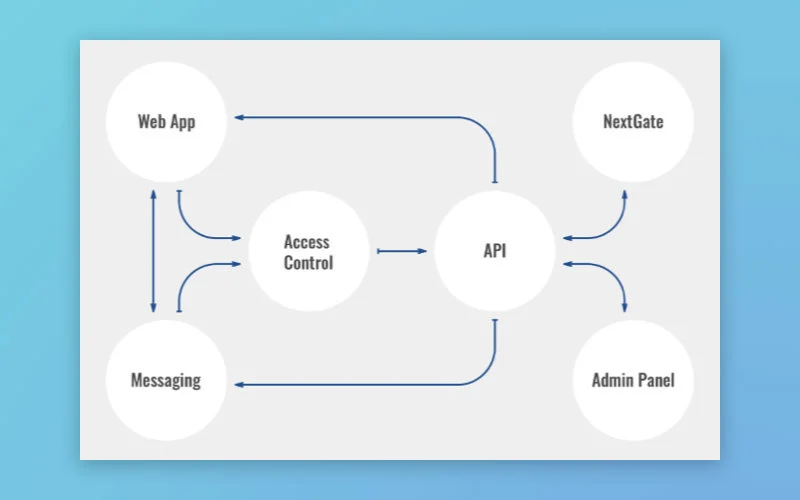

Each layer of the app is built atop the one below, forming a stack. This makes web stack technologies heavily dependent on each other. The image above shows the main building blocks of a typical technology stack; however, there may be other supporting elements involved. Let’s view the standard elements of frontend and backend development in detail.

Frontend

The frontend is also known as the client side, as users see and interact with this part of an app. For a web app, this interaction is carried out in a web browser and is possible thanks to a number of programming tools. Client-facing web apps are usually built using a combination of JavaScript, HTML, and CSS. We’ll explain the components of the frontend technology stack below.

Tools we use for frontend web development

HTML (Hypertext Markup Language) is a markup language used for describing the structure of information presented on a web page. Yalantis uses HTML5, the latest version of HTML, which has new elements and attributes for creating web apps more easily and effectively. A key advantage of HTML5 is its native audio and video support, which wasn’t included in previous HTML versions.

CSS (Cascading Style Sheets) is a style sheet language that describes the look and formatting of a document written in HTML. CSS rules apply styling to the text and embedded tags of an electronic document.

At Yalantis, we use CSS3 (the latest working version of CSS) along with HTML5. Unlike earlier versions of CSS, CSS3 supports responsive design through media queries, allowing website elements to respond differently when viewed on devices of different sizes. CSS3 is also split into many individual modules, which both enhances its functionality and makes it simpler to work with. In addition, animations and 3D transformations work better in CSS3.

JavaScript (or simply JS) is the third main technology for building the frontend of a web app. JavaScript is commonly used for creating dynamic and interactive web pages. Among other things, it enables simple and complex web animations, which greatly contribute to a positive user experience. Check out our article on using web animations to create user-friendly apps for more on this topic. JavaScript is also actively used in many non-browser environments, including on web servers and in databases.

TypeScript is a JavaScript superset that we often include in our frontend toolkit. TypeScript enables both a dynamic approach to programming and proper code structuring thanks to the use of type checking. This makes it a perfect fit for developing complex, multi-tier projects.
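
To make the type-checking point concrete, here is a minimal sketch. The `CartItem` shape and the `cartTotal` function are hypothetical names invented for illustration; they don't come from any particular project.

```typescript
// Hypothetical shape of a shopping cart line item (illustrative only).
interface CartItem {
  name: string;
  unitPrice: number; // price in cents, to avoid floating-point rounding
  quantity: number;
}

// The compiler verifies that every caller passes an array of CartItem.
function cartTotal(items: CartItem[]): number {
  return items.reduce((sum, item) => sum + item.unitPrice * item.quantity, 0);
}

const total = cartTotal([
  { name: "Notebook", unitPrice: 450, quantity: 2 },
  { name: "Pen", unitPrice: 120, quantity: 5 },
]);
// total is 1500 (cents)

// The following call would be rejected at compile time, before the app runs,
// because quantity is a string rather than a number:
// cartTotal([{ name: "Pen", unitPrice: 120, quantity: "5" }]);
```

In plain JavaScript, the commented-out call would run and silently produce a wrong total. Catching that class of mistake early is what makes type checking valuable on larger projects.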

Frontend frameworks

Frontend frameworks are packages with prewritten, standardized code structured in files and folders. They provide developers with a foundation of pretested, functional code to build on along with the ability to change the final design. Frameworks help developers save time, as they don’t need to write every single line of code from scratch. Yalantis often chooses React for frontend web development, as this JavaScript library is perfect for building user interfaces. We have also mastered Angular and use it when a client prefers it.

Backend

Even though the backend performs offstage and is not visible to users, it’s the engine that drives your app and implements its logic. The web server, which is part of the backend, accepts requests from a browser, processes these requests according to a certain logic, turns to the database if needed, and sends back the relevant content. The backend consists of a database, a server app, and the server itself. Let’s look at each component of the backend technology stack in detail.
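
The request flow described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the `AppRequest` and `AppResponse` shapes, the `/products/` route, and the in-memory `productTable` standing in for a database are all hypothetical.

```typescript
// Hypothetical request/response shapes; a real server would use HTTP types.
interface AppRequest {
  method: "GET" | "POST";
  path: string;
}

interface AppResponse {
  status: number;
  body: string;
}

// An in-memory Map stands in for the database in this sketch.
const productTable = new Map<string, string>([
  ["1", "Notebook"],
  ["2", "Pen"],
]);

// The server app: accept a request, apply logic, consult the data store
// if needed, and send back the relevant content.
function handleRequest(req: AppRequest): AppResponse {
  if (req.method !== "GET") {
    return { status: 405, body: "Method not allowed" };
  }
  const match = /^\/products\/(\w+)$/.exec(req.path);
  if (match === null) {
    return { status: 404, body: "Unknown route" };
  }
  const id = match[1] ?? "";
  const product = productTable.get(id);
  if (product === undefined) {
    return { status: 404, body: "Product not found" };
  }
  return { status: 200, body: product };
}
```

Calling `handleRequest({ method: "GET", path: "/products/1" })` returns a 200 response with the product name, while an unknown ID or route returns a 404.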

Tools we use for backend web development

Running on the server, the server app listens for requests, retrieves information from the database, and sends responses. Server apps can be written in different server-side technologies depending on the project’s complexity. Yalantis uses such server-side technologies as Golang, Rust, Ruby, and Node.js. They are versatile and offer a number of benefits.

Golang is a statically typed programming language that allows for efficient code maintainability and management with a built-in package manager. The Go language is compiled and uses garbage collection to manage memory automatically, which helps prevent memory leaks and makes for a safer development process.

Rust is also a statically typed language that takes the best from common rules of other statically typed languages such as Java and C++ and significantly improves those rules. Rust provides efficient memory management without garbage collection or virtual machines (VMs), delivers high speed and performance, and helps developers write relatively bug-free code. This programming language can be used to develop large distributed systems, web services, IoT networks, and embedded systems.

Ruby is an object-oriented programming language that provides good support for data validation, libraries for authentication and user management, and more. This language is easy to learn, flexible, and composable, meaning its parts can be combined and recombined in different variations. Ruby allows for quick web development with the help of the Ruby on Rails framework.

Node.js is a JavaScript runtime environment. It’s commonly used for backend and full-cycle development. It has many ready-made solutions for nearly all development challenges, reducing the time for developing custom web applications. Read our detailed comparison of Node.js and Golang to familiarize yourself with the differences between them in terms of scalability, performance, error handling, and other criteria.

Web frameworks

Web frameworks greatly simplify backend development, and the framework you should choose depends on the programming languages you’ve picked. Nearly every popular programming language has at least one general-purpose framework. Libraries for a framework provide reusable bundles written in the language of the framework: for instance, code for a drop-down menu.

However, frameworks aren’t just about the code: they’re completely layered workflow environments. Yalantis uses Ruby on Rails as a Ruby framework and Gorilla as a Golang framework. Both ensure clean syntax, rapid development, and stability.

Databases

A database is an organized collection of information. Databases typically include aggregations of data records or files. For example, in e-commerce development, these records or files will be sales transactions, product catalogs and inventories, and customer profiles.

There are many types of databases. In this article, we only touch upon databases Yalantis works with to outline some use cases for particular databases. Our specialists work with the following databases and choose which to use depending on the particularities of a client’s project.

PostgreSQL. This database is especially suitable for financial software development, manufacturing, research, and scientific projects, as PostgreSQL has excellent analytical capabilities and boasts a powerful SQL engine, which makes processing large amounts of data easy and smooth.

MySQL. Especially designed for web development, MySQL provides high performance and scalability. This database is the best fit for apps that rely heavily on multi-row transactions, such as a typical banking app. Generally speaking, MySQL is still a great choice for a wide range of apps.

MongoDB. This database boasts numerous capabilities, such as a document-based data model. MongoDB is a great choice when it comes to calculating distances and figuring out geospatial information about customers, as this database has specific geospatial features. It’s also good as part of a technology stack for e-commerce, event, and gaming apps.

Redis. Redis is an in-memory data store that provides sub-millisecond response times and can handle millions of requests per second. This high speed is essential for real-time apps, including those for advertising, healthcare, and IoT.

Elasticsearch. This document-based data storage and retrieval tool is tailored to storing and rapidly retrieving information. Elasticsearch should be used for facilitating the user experience with quicker search results.

Application Programming Interfaces

An Application Programming Interface (API) provides a connection between the server and the client. APIs also help a server pull data from and transfer data to a database.

Numerous services we use daily rely on a huge number of interconnected APIs. If even one of them fails, the service may stop functioning properly. To avoid this, APIs should be thoroughly tested.

Server architecture

From the early days of development, a developer needs a place to deploy written code. For this, we use a server setup.

There are many variations of server architectures, including those in which the entire environment resides on a single server and those with a database management system (DBMS) separated from the rest of the environment. Your choice of server architecture should depend on such factors as performance, scalability, availability, reliability, cost, and ease of management.

DevOps solutions for server setup

Yalantis provides DevOps services for companies that run apps in the cloud to ensure the speed of development and operations. We use the services of cloud providers to host web applications. AWS is our priority for hosting web projects due to its flexibility, reliability, and security. As an alternative to AWS, we also use Google Cloud Platform Services, Microsoft Azure, and Heroku.

A cloud provider gives us a server to use, and then our DevOps specialist:

- sets up the environment — all additional software — to ensure smooth operation of the app. Our DevOps specialists typically use Nginx, which is a powerful web server. Nginx allows for setting up reverse proxies, load balancing, and more.

- sets up a continuous integration/continuous deployment (CI/CD) pipeline from scratch. Continuous integration lets developers keep adding code that is merged and tested automatically as development continues. Continuous deployment is responsible for delivering code to the server.

We use such tools as GitLab and GitLab CI. GitLab is a single app for the whole DevOps lifecycle, and it’s where all written code is stored. We also use the GitLab CI (Continuous Integration) service, which builds and tests software whenever a developer pushes code to the repository.

Having studied the basics of the technology stack, let’s move on to criteria that will help you and your development team select the most appropriate technologies for your project.

Criteria that impact the choice of technology stack

Keep in mind that the type of app you’re developing influences the technology you should select. A medical app, for example, will require high security, while audio/video streaming and file-sharing apps will need high-performance technologies, such as the Rust programming language, that can handle high loads.

When deciding on your project’s technology stack, you should analyze your web app based on the criteria mentioned below to narrow down the options. Keep in mind that web development technologies can be used in different combinations, and frameworks usually are chosen after the programming language has been agreed on.

However, sometimes the choice of the framework can impact the choice of language. For example, if you choose the Strapi open-source framework for developing a content management system (CMS) because it covers all critical CMS features, then it would be beneficial to use Node.js for the back end, as Strapi is built on Node.js.

Before finalizing the tech stack for your project, you’ll need to share with your development team your business goals, all business requirements, and project constraints. Below, we dive into different project requirements and limitations.

Functional requirements

Functional requirements describe features that your web application should have. We need to single out the functional requirements that have the greatest impact on the software architecture and technologies, as the software architecture directly shapes the technology stack. For instance, you may need your application to integrate with certain third-party services. To ensure it can, it’s important to maintain architectural flexibility in case any of these services change over time or it becomes necessary to connect with a new service. For such an architecture, we’ll need to pick the technologies that fit best.

Functional requirements also help define the project’s complexity, which affects the choice of technology stack. As the size of a project grows, the complexity of the project usually increases too, as shown in the diagram below:

Small projects. Single-page sites, portfolios, presentations, digital magazines, and other small web solutions can be implemented with the help of design tools like Readymag and Webflow. Ready-made solutions can be a better choice for small sites in terms of quick and cost-efficient development as well as simple site management for non-technical specialists.

Medium-sized projects. Online stores, financial platforms, and enterprise apps require a more complex technology stack with several layers and a combination of languages, as these apps have more features and are developed with the help of frameworks.

Large projects. Social networks and marketplaces are considered large projects and may require much more scalability, speed, and serviceability, which, in turn, require a versatile and well-suited tech stack.

Nevertheless, deciding on a technology stack for a project of any size requires consideration of both functional and non-functional requirements.

Non-functional requirements

Non-functional requirements are also called quality attributes, and they represent your expectations of your application, such as scalability, availability, a high level of security, high performance, or the ability to expand to new markets. In particular, expanding to other countries may require choosing a tech stack that can ensure the same application functionality across regions (e.g. taking into account the availability of cloud providers).

To ensure the high performance of your application yet save money, you may build the core of your application on a super-fast programming language like Rust and build the rest of the application in other, simpler programming languages. The Rust language is well-suited for developing highly performant software components with a low memory footprint.

Quick time to market is also a non-functional requirement. If quick time to market is critical for you, we recommend using ready-made solutions that help to minimize the development and release time. For example, the Ruby on Rails framework, which provides access to a set of basic solutions, will save significant time. For Java, the Spring framework has lots of out-of-the-box solutions. Sticking to a popular technology will also save time in seeking out developers. And to top it all off, well-documented technologies facilitate the development of some features.

If you expect your application to scale easily, that will also impact the technologies used. You can scale either vertically, adding resources such as CPU and memory to an existing machine, or horizontally, adding processing units or physical machines to your cluster or replicas to your database. Such technologies as React, Rust, Node.js, Golang, and Ruby on Rails have great potential to ensure the scalability of your application. Your app will also scale well on AWS, as it uses advanced Ethernet networking technology designed for scaling along with high availability and security.
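
As a rough illustration of horizontal scaling, the sketch below spreads incoming requests across identical app instances in round-robin order. The `RoundRobinPool` class and the instance names are hypothetical, and in practice this job is usually handled by a load balancer rather than by application code.

```typescript
// Hypothetical pool of identical app instances (illustrative names only).
class RoundRobinPool {
  private instances: string[];
  private next = 0;

  constructor(instances: string[]) {
    if (instances.length === 0) {
      throw new Error("pool needs at least one instance");
    }
    this.instances = [...instances]; // copy so the caller's array is untouched
  }

  // Scaling horizontally means adding another instance to the pool.
  add(instance: string): void {
    this.instances.push(instance);
  }

  // Pick the instance that should handle the next request.
  pick(): string {
    const index = this.next % this.instances.length;
    this.next = index + 1;
    // index is always within bounds because the pool is never empty.
    return this.instances[index] as string;
  }
}
```

With two instances, successive `pick()` calls alternate between them. After `add("app-3")`, the same calls rotate across all three, so each instance handles a smaller share of the traffic.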

Your app may require high security. For instance, if you’re developing a health app, you should choose technologies that provide the highest level of security, especially if you work with sensitive protected health information (PHI). Ruby on Rails, for example, is a good choice, as it provides a DSL (domain-specific language) that helps you configure a content security policy for your app. Rust also adds to your application’s security by allowing for the development of practically bug-free software with a low chance of memory-related errors.

To provide security for the medical web app Healthfully, our specialists ensured the following:

- All interactions with the app are carried out using an API.

- Access to the API is token-based, with a time limit on token validity.

- Access is granted for each specific request.

- All infrastructure is in AWS, and access from the internet is possible only via the API; all other communication is behind a firewall.

- Backups are performed regularly.

- HIPAA compliance is ensured by securely sharing HL7 messages, limiting the visibility of sensitive data, and regulating the number of devices simultaneously logged into one account.
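
The token-based access rule from the list above can be sketched as follows. This is a simplified illustration and not Healthfully’s actual implementation: the `AccessToken` shape, the `isTokenValid` and `authorize` functions, and the 15-minute lifetime are all assumptions.

```typescript
// Hypothetical token record; a real system would also verify a signature.
interface AccessToken {
  subject: string;
  issuedAtMs: number;
}

// Assumed lifetime for illustration: 15 minutes.
const TOKEN_LIFETIME_MS = 15 * 60 * 1000;

// A token is valid only inside its lifetime window.
function isTokenValid(token: AccessToken, nowMs: number): boolean {
  const ageMs = nowMs - token.issuedAtMs;
  return ageMs >= 0 && ageMs < TOKEN_LIFETIME_MS;
}

// Access is granted per request: every call re-checks the token
// and returns an HTTP-style status code.
function authorize(token: AccessToken | undefined, nowMs: number): number {
  if (token === undefined) {
    return 401; // no token supplied
  }
  return isTokenValid(token, nowMs) ? 200 : 401; // 401 if expired or not yet valid
}
```

Passing the current time in as a parameter, rather than reading the clock inside the function, keeps the check easy to test with fixed timestamps.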

Constraints

Project constraints are indisputable and critical restrictions on the project. One of the most common constraints is the necessity for your application to comply with domain-specific or local laws and regulations such as Payment Card Industry Data Security Standard (PCI DSS) for mobile banking app development or the abovementioned HIPAA for US healthcare applications. If you work with an outsourcing development team, another project constraint could be the need to work with your in-house team to determine a common approach to the project. Constraints can’t be ignored or omitted. And as a rule, they can slow down the software development lifecycle.

Choosing the technologies that best fit your web project isn’t easy, but there is a way for you to save time and effort. Find out what it is in the next section.

Why is it best to work with a solution architect?

As you can see, choosing your project’s technology stack calls for a thorough approach, including a close examination of your goals and business requirements. From the initial project stage, when you’re partnering with an IT consultancy company, you should work with a competent solution architect to lay the foundation for your future project, which will drive your choice of technology stack.

A solution architect has all the necessary business domain and technical knowledge to help you decide on the right direction for your project.

Benefits of a solution architect for your project:

- Ready architecture simplifies the choice of technology stack. A solution architect first develops an architecture, based on which it will be much easier to choose relevant technologies. Choosing technologies before designing an architecture can significantly slow down the development process.

- Minimize mistakes from the very beginning. This results in future savings of time and resources that could be necessary for re-engineering the application.

- Domain-specific knowledge allows your architect to define all project constraints you should take into account before deciding on a suitable technology set. Plus, your solution architect can tell whether your business requirements match your domain requirements.

- Holistic understanding of technologies. Solution architects usually have broad knowledge about existing and emerging technologies and lots of ready-made solutions that can make projects in particular domains more time- and cost-efficient.

As a result of your cooperation with a solution architect, you have an extremely high chance of getting a successful end solution that meets all functional and non-functional requirements right away.

Steps to avoid losing a fortune when developing a web app

The following tips will help you be prepared for web app development so as not to have regrets about outsized expenses you could have avoided.

Step 1. Make sure your specification is clear and understandable. If you outsource your project to an offshore web development team, make sure you have a clear project specification to help your developers prepare a precise estimate, which will allow you to plan your expenses. Any ambiguity will lead to a higher price. A detailed specification allows you to avoid this risk.

Step 2. Create an MVP first and test it. Keep in mind that in certain cases, a landing page can serve as an excellent and inexpensive MVP. Make sure your product will be in demand, and consider all the errors that occur while testing. Only then should you develop a complete solution.

Step 3. Use ready-made solutions when possible. Keep in mind that you don’t have to build all features from scratch, as similar solutions may already exist in the form of community-built libraries or third-party integrations (registration via Facebook or Google, for example). We’ve already mentioned that Ruby on Rails offers many libraries that accelerate web app and website development. ActiveAdmin is one of them. Using ActiveAdmin, developers can enable powerful content management functionality for their web apps.

Step 4. Save on cloud hosting solutions. We use Amazon Web Services as the primary service for hosting web projects we create. AWS offers flexible pricing, with each service priced a la carte. This means you pay only for the services you use. This makes a lot of sense for server infrastructure, as traffic is unpredictable. This is especially true for startups, as it’s hard to say when exactly they’ll attract the first wave of users. That’s why the cloud pricing model suits startups best.

Step 5. Think in advance. When selecting technologies for your web app, think about how you’ll support the app in the long run. Support will be easier if the app has a good architecture and optimized code from the very beginning. Any unsolved problems will appear later on and cause even worse problems. Support and maintenance should be considered when choosing the tech stack, as their consideration will simplify updates even if you decide to change software development service providers.

Finally, keep an eye on the most widely discussed web application development trends in 2023. Artificial intelligence is gaining ground, as it offers a personalized user experience by gathering users’ data and speeds up interactions with a web application. Another trend worth mentioning is progressive web apps (PWAs), which provide a mobile-like experience and can be easily installed via a shareable link.

Different web apps use different development tools. This is the best evidence that there’s no single most effective technology stack. When choosing a technology stack for web application development, keep in mind the specifics of your project. Solution architects at our outsourcing company will consider your product requirements and turn them into an architecture and design that lay the foundation for a top-notch solution. Our agency can help you with this if you share your web app idea and expectations with us. Tell us what you want to achieve, and our technical experts will gladly suggest the best tools to make it happen.

FAQ

Why is it important for a business owner to take part in choosing the technology stack for a web project?

Business owners should be in the loop on technologies that are used for their projects in order to stay on the same page as developers and understand what exactly they’re investing in. If you know the specifics of common programming languages and frameworks, your development team won’t have to spend lots of time explaining and justifying their every decision, freeing more time for actual development.

Why is developing an MVP first a reasonable idea?

Beginning with an MVP rather than a full-fledged web application is first of all time- and cost-efficient. You’ll be able to see the first results quickly and at a reasonable cost. By evaluating the first version of your solution, you’ll know what to improve in the next iterations to launch the best web system possible.

How flexible is the web development process if project requirements change?

Businesses don’t stand still, and it may be that during project execution you realize that your project needs changes. It’s best if you know exactly what you need from the get-go. But if any changes are necessary, we can certainly modify our development process and adjust it to your new needs. You should remember, however, that such abrupt changes may require additional investment, time, and technical specialists.
