A software development project can't be considered successful just because it was completed on time and within budget. Another factor determines whether the released product is safe and works properly: the quality of the software.

In 2021, New Jersey-based Virtua Health began vaccinating patients against COVID-19. However, after patients began registering for appointments through the automatic vaccination scheduling system, the hospital found that between 10,000 and 11,000 visits had been duplicated. The glitch slowed down hospital operations.

Of course, after finding the problem, the company fixed the bugs. But the incident is a reminder of how important quality assurance is for any kind of software. This article discusses approaches to testing as an essential part of business processes and describes several effective types of quality testing.

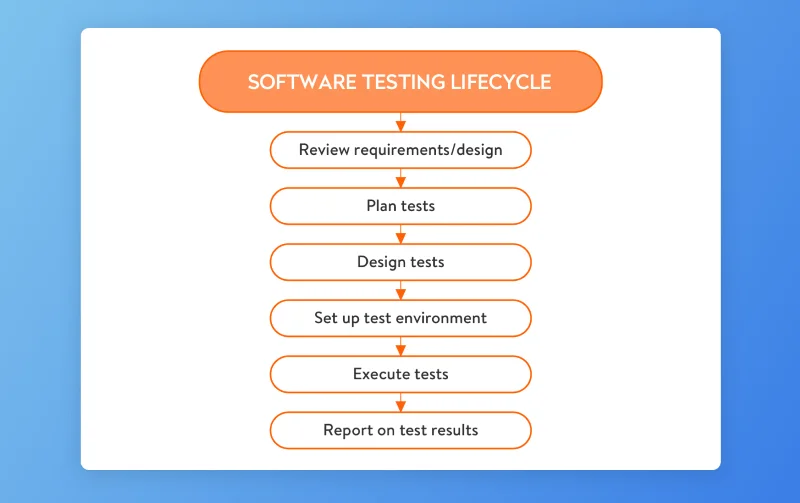

Steps of the quality assurance process

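We can divide the testing process into the following stages:

- reviewing requirements

- planning tests

- designing tests

- setting up the test environment

- executing tests

- reporting on test results.

Let’s explore what activities and deliverables each stage involves.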

Reviewing requirements

Before starting the development process, you can engage QA specialists. They help identify problems with a product’s business logic, eliminating some potential issues even before the development team creates project documentation. This also reduces development costs, as errors cost less to rectify if detected at an early stage.

Additionally, QA specialists help define and analyze your software’s features. At the requirements review stage, they already start thinking about how each feature will be tested.

Then the development team creates a product requirements specification that describes the software solution under development. Once the specification is ready, designers create wireframes. At this stage, QA specialists make sure the wireframes reflect all the business logic described in the specification. They also check whether everything complies with Google’s and/or Apple’s guidelines for mobile apps.

Planning tests

Traditionally, all quality assurance and testing activities are documented in a test plan before the start of a project. QA specialists create a test strategy document that describes:

- test environments (test devices, operating system versions, etc.)

- types of testing that will be run, taking into account the specifics of the project

- criteria for the start and end of testing.

Before the start of each sprint, the responsible QA specialist participates in a sprint planning meeting, where the team discusses the sprint’s scope and implementation details. This helps the QA specialist determine which features they’ll need to cover with checklists at the test design stage. During the meeting, the QA specialist also agrees on delivery dates for the builds to be tested mid-sprint and for regression testing at the end of each sprint.

As a rule, developers and QA specialists don’t create a checklist for the entire project in one go. Requirements may change during development, which makes parts of a comprehensive checklist irrelevant. Instead, you can create checklists on a sprint-by-sprint basis. This eliminates unnecessary work and saves money. Create documentation only when it’s necessary.

Designing tests

After a sprint has been planned and tasks in the sprint scope have been defined, QA specialists can start creating either a checklist or test cases.

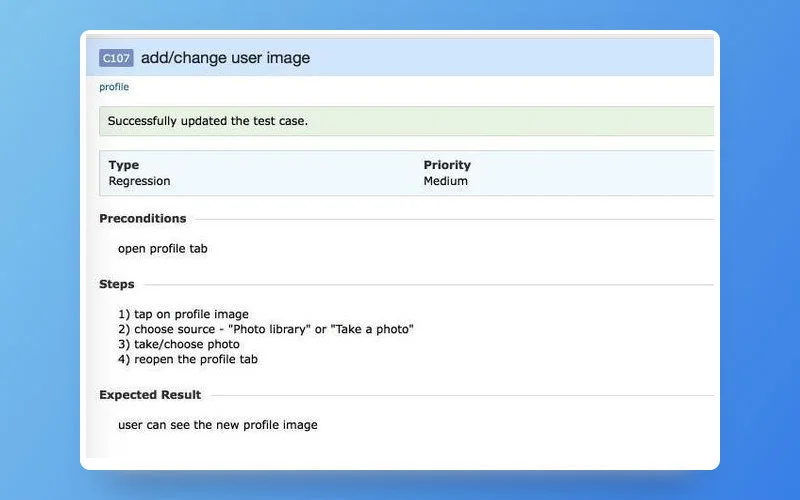

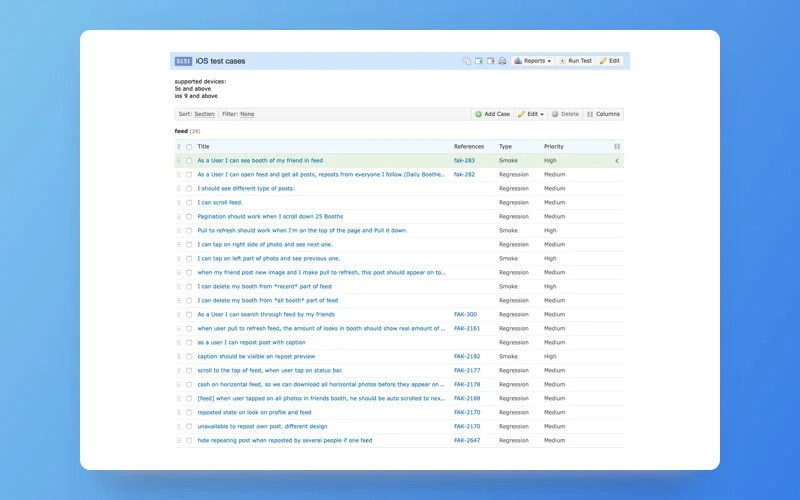

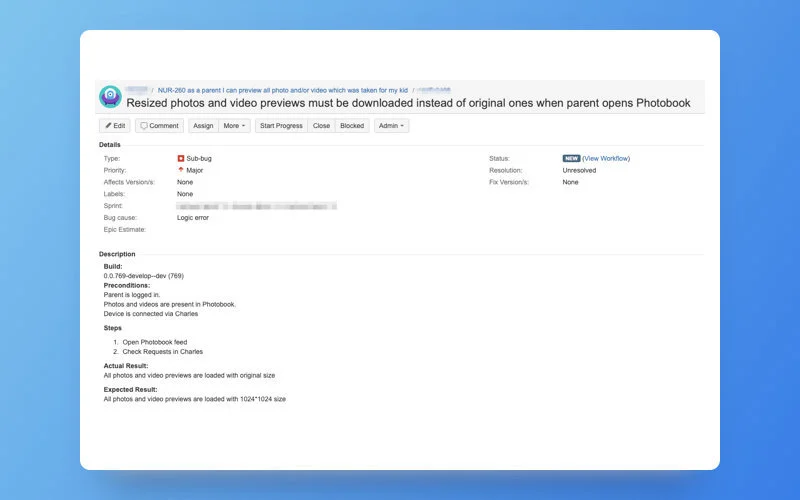

Writing test cases for specific test scenarios is standard practice for verifying that an app works as it’s supposed to. Test cases consist of a title, preconditions, steps to be taken, and expected results.

Here’s an example of a test case:
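As a hypothetical illustration, the four parts of a test case (title, preconditions, steps, and expected results) could be captured as structured data in Python. The login scenario, the `ManualTestCase` class, and its `is_complete()` helper are all made up for this sketch:

```python
from dataclasses import dataclass


@dataclass
class ManualTestCase:
    """Hypothetical structure for a test case: title, preconditions, steps, expected results."""

    title: str
    preconditions: list[str]
    steps: list[str]
    expected_results: list[str]

    def is_complete(self) -> bool:
        # A test case is only usable when every part has been filled in.
        return bool(self.title and self.preconditions and self.steps and self.expected_results)


# Hypothetical test case for a login feature.
login_case = ManualTestCase(
    title="Log in with a valid email and password",
    preconditions=["The user is registered", "The app is installed and open"],
    steps=[
        "Tap 'Log in'",
        "Enter the registered email",
        "Enter the matching password",
        "Tap 'Submit'",
    ],
    expected_results=["The home screen opens", "The user's name appears in the header"],
)

print(login_case.is_complete())  # True
```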

Test cases are effective for complicated test scenarios, but creating them is extremely time-consuming. To speed up the development process and cut costs, the team uses test checklists instead of test cases to test user flows.

A checklist serves the same purpose as a test case but is less detailed and takes less time to create. You can use checklists for basic user flows. For instance, it’s impractical to write detailed test cases for a standard user registration flow, which usually involves only a few steps. However, through our experience testing apps, we’ve learned to use checklists even for complicated test scenarios, which keeps software testing clear and effective.

Example of a checklist:

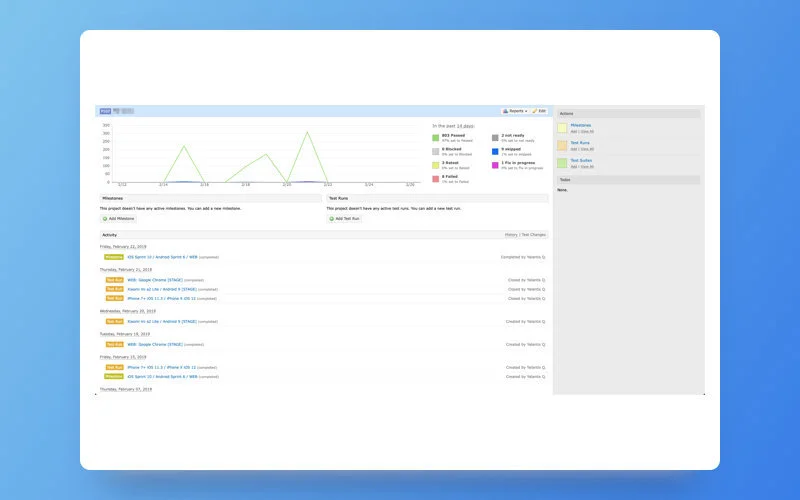

This checklist was created in TestRail, a tool that stores information about all tests, including testing statistics, for the entire period of product development. Statistics are presented in a simple format.

The QA team keeps checklists up to date throughout product development.
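To illustrate the kind of summary a test management tool provides, here is a minimal Python sketch that turns checklist results into per-status counts and a pass rate. The checks and status labels are hypothetical, and this is not TestRail’s API or export format:

```python
from collections import Counter

# Hypothetical checklist results as (check, status) pairs.
results = [
    ("Registration with a valid email", "passed"),
    ("Registration with an already used email", "passed"),
    ("Password reset link is sent", "failed"),
    ("Profile photo upload", "blocked"),
    ("Logout clears the session", "passed"),
]


def summarize(results):
    """Count checks per status and compute the pass rate in percent."""
    counts = Counter(status for _, status in results)
    total = len(results)
    pass_rate = 100 * counts["passed"] / total if total else 0.0
    return counts, pass_rate


counts, pass_rate = summarize(results)
print(dict(counts))         # {'passed': 3, 'failed': 1, 'blocked': 1}
print(f"{pass_rate:.0f}%")  # 60%
```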

Setting up the test environment

When the checklist or test case is ready and developers have finished their last revisions to a feature, QA specialists need to ensure that the test environment is ready. Preparing a test environment involves:

- preparing a test device with a certain version of an operating system

- installing any necessary applications such as Fake GPS, iTools, and Charles Proxy

- logging in to Facebook and other accounts.
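The preparation steps above can be turned into a quick readiness check before testing starts. In this sketch, the `DeviceSetup` class, the `missing_setup()` helper, and the OS version are hypothetical; only the tool and account names come from the list above:

```python
from dataclasses import dataclass


@dataclass
class DeviceSetup:
    """Hypothetical record of what is prepared on one test device."""

    device: str
    os_version: str
    installed_apps: set[str]
    logged_in_accounts: set[str]


def missing_setup(env: DeviceSetup, required_os: str,
                  required_apps: set[str], required_accounts: set[str]) -> list[str]:
    """Return what still has to be done before testing can start (empty list = ready)."""
    problems = []
    if env.os_version != required_os:
        problems.append(f"OS is {env.os_version}, expected {required_os}")
    # sorted() keeps the report order stable, since sets are unordered.
    for app in sorted(required_apps - env.installed_apps):
        problems.append(f"install {app}")
    for account in sorted(required_accounts - env.logged_in_accounts):
        problems.append(f"log in to {account}")
    return problems


phone = DeviceSetup(
    device="Test phone 1",
    os_version="14",
    installed_apps={"Charles Proxy", "Fake GPS"},
    logged_in_accounts=set(),
)
print(missing_setup(phone, "14", {"Charles Proxy", "Fake GPS", "iTools"}, {"Facebook"}))
# ['install iTools', 'log in to Facebook']
```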

Executing tests

Most commonly, QA specialists apply both manual and automated testing to all the software we build to ensure the highest code quality. They test the front end and back end of each application and perform API testing.

Reporting on test results

It’s important to quickly provide feedback about a product’s quality and any defects identified during the software testing process. As soon as a bug is spotted, the QA team registers it in Jira, which serves as the bug tracking system.

All team members have access to Jira, so all bugs and their statuses are visible to all participants in the development process. At the end of testing, statistics for completed software testing and test results can be found in TestRail. When we run automated tests, a report is automatically created and sent to our QA specialists.

After each sprint, we present a build to our client so they can see our progress, assess the quality and convenience of features, and make changes to the requirements if necessary. We also provide release notes showing which features have already been completed and which we’re still working on, along with a list of identified problems that will be fixed soon.

Types of testing

Let’s now explore the most commonly used types of testing and how each affects development outcomes.

New feature testing

New feature testing checks if a feature looks and works as expected and ensures that bugs are caught quickly. During this stage, a feature is thoroughly tested. QA specialists test:

- the functionality of the product and how it works

- the product’s appearance

- compliance with UI/UX design guidelines.

This stage of testing is considered complete when all test cases related to a new feature have been passed. During this stage, the feature is tested independently, meaning interactions with other features aren’t tested. You should also make sure the feature works correctly on various devices.

As a project gains functionality, QA specialists need to ensure that all features work properly together and that new features don’t break existing functionality. To do this, they conduct regression testing.

Regression testing

Regression testing checks the current state of the product. This type of testing makes sure that changes to code (new features, bug fixes) don’t break previously implemented functionality. QA specialists should thoroughly test each feature as well as the interactions among features. Code must pass all test cases to be accepted.
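In automated form, a regression test pins down behavior that already works, so a later change that breaks it is caught before the code is accepted. A minimal sketch with Python’s built-in `unittest`, assuming a hypothetical `apply_discount()` feature and a hypothetical previously fixed bug:

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical existing feature: apply a percentage discount to a price in cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents - price_cents * percent // 100


class ApplyDiscountRegressionTests(unittest.TestCase):
    # Each test pins down behavior that already works.
    def test_regular_discount(self):
        self.assertEqual(apply_discount(1000, 15), 850)

    def test_full_discount_is_free(self):
        self.assertEqual(apply_discount(1000, 100), 0)

    def test_negative_percent_is_rejected(self):
        # Guards against a previously fixed bug (hypothetical):
        # negative discounts used to increase the price.
        with self.assertRaises(ValueError):
            apply_discount(1000, -10)
```

Saved in a module named, for example, `test_discount.py`, these tests can be run with `python -m unittest`, so the whole regression suite is re-executed after every change.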

Since regression testing is quite time-consuming and expensive, we recommend doing it once every three or four sprints (depending on the project’s size) and before release. Regression testing before a release shows how all accumulated changes affect the existing product and helps identify any broken functionality or defects.

Regression testing can be run on one or more devices. When several devices are available, it can be combined with smoke testing: one device is used for all test scenarios, while the other devices get a smoke test.

Smoke testing

Smoke testing is a fast and superficial type of testing that checks if a build is stable and if core features are working. Scenarios with the highest priority are checked feature by feature, but not in detail.

Smoke testing takes place after a scope of work has been completed (bug fixes, feature changes, refactoring, server migration). This type of testing can’t replace thorough testing, since issues in alternative scenarios can be missed.
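One way to keep a smoke suite fast is to tag the highest-priority scenarios and run only those. A sketch using `unittest`, where the `@smoke` decorator, the `log_in()` stand-in for the app, and the test names are hypothetical conventions rather than built-in features:

```python
import unittest

# Hypothetical stand-in for the application under test.
USERS = {"anna@example.com": "s3cret"}


def log_in(email: str, password: str) -> bool:
    return USERS.get(email) == password


def smoke(test_func):
    """Mark a test as part of the smoke suite (a hypothetical project convention)."""
    test_func.smoke = True
    return test_func


class LoginTests(unittest.TestCase):
    @smoke
    def test_valid_credentials_log_in(self):
        self.assertTrue(log_in("anna@example.com", "s3cret"))

    @smoke
    def test_wrong_password_is_rejected(self):
        self.assertFalse(log_in("anna@example.com", "wrong"))

    def test_empty_password_is_rejected(self):
        # Alternative scenario: covered by full testing, skipped in the smoke pass.
        self.assertFalse(log_in("anna@example.com", ""))


def build_smoke_suite(case_class: type[unittest.TestCase]) -> unittest.TestSuite:
    """Collect only the test methods marked with @smoke."""
    names = unittest.TestLoader().getTestCaseNames(case_class)
    return unittest.TestSuite(
        case_class(name) for name in names if getattr(getattr(case_class, name), "smoke", False)
    )


smoke_suite = build_smoke_suite(LoginTests)
print(smoke_suite.countTestCases())  # 2
```

The untagged test still runs during full regression testing; it is simply left out of the quick smoke pass.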

Update testing

Update testing is performed only for software that is already in use and is receiving a new version. It makes sure existing users won’t be adversely affected by the update: their data won’t be lost, and both old and new features will work as expected.
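For update testing, a key check is that data created by the old version survives the update. A minimal sketch, assuming a hypothetical schema change where version 1 of an app stored a user’s full name in one field and version 2 splits it into first and last name:

```python
def migrate_v1_to_v2(profile_v1: dict) -> dict:
    """Convert a v1 profile to the v2 format (hypothetical schema)."""
    first, _, last = profile_v1["full_name"].partition(" ")
    return {
        "id": profile_v1["id"],
        "first_name": first,
        "last_name": last,
        "email": profile_v1["email"],
        "schema_version": 2,
    }


def check_update_preserves_data(profile_v1: dict) -> None:
    """Fail with AssertionError if the migration loses or changes user data."""
    profile_v2 = migrate_v1_to_v2(profile_v1)
    # Existing data must survive the update unchanged...
    assert profile_v2["id"] == profile_v1["id"]
    assert profile_v2["email"] == profile_v1["email"]
    # ...and the old value must still be recoverable from the new fields.
    rebuilt = f'{profile_v2["first_name"]} {profile_v2["last_name"]}'.strip()
    assert rebuilt == profile_v1["full_name"]


# Hypothetical v1 data, including edge cases such as one-word and multi-word names.
old_profiles = [
    {"id": 1, "full_name": "Anna Smith", "email": "anna@example.com"},
    {"id": 2, "full_name": "Cher", "email": "cher@example.com"},
    {"id": 3, "full_name": "Jean Claude Van Damme", "email": "jcvd@example.com"},
]
for old_profile in old_profiles:
    check_update_preserves_data(old_profile)
print("all profiles migrated without data loss")
```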

Non-functional testing

QA testers also perform so-called non-functional tests that don’t touch upon an app’s specific features. They carry out the following types of non-functional testing:

Performance testing checks a system’s responsiveness, availability, and stability under a specific workload.

Load testing checks the performance of a system when multiple users access it simultaneously.

Compatibility testing examines how a product works on different operating system versions and on different devices.

Condition testing checks a product’s performance during low battery conditions or lack of internet connection.

Compliance checking ensures an app complies with Google’s and/or Apple’s guidelines.

Installation testing checks if an app installs and uninstalls successfully.

Interruption testing shows how a product reacts to interruptions (loss of network connection, notifications, etc.) and checks if it’s able to correctly resume its work.

Localization testing checks that no new bugs have appeared after a product’s content has been translated into another language.

Migration testing ensures that everything works seamlessly after adding new features or changing the technology stack of an already deployed software solution.

Usability testing checks how convenient a product is to use and how intuitive its UI/UX is.

All of these types of testing can be performed with or without a checklist. A checklist is simply a framework that helps us stick to the plan and make sure we don’t forget anything. But documenting every check we perform is impossible and, quite simply, unnecessary. Testers use a checklist alongside exploratory testing, which keeps testing quick and flexible and lets QA specialists focus on real problems.

Testing before release

The QA team carefully checks products before they’re launched. As a rule, we perform regression testing and exploratory testing. Finally, right before the release, we bring in a QA specialist who hasn’t worked on the project to look at the product with a fresh pair of eyes.

Test automation

We also automate the main user flows for long-term projects, which often receive changes and new functionality. With manual testing alone, testers have to repeat the same steps again and again to ensure new features or code changes haven’t affected other parts of the product.

Instead of repeatedly testing each new feature or code change manually, we create tests that check everything automatically. This saves time and lets QA specialists focus manual testing on complex custom cases.

Adopting test automation doesn’t mean you need to automate absolutely everything. Here’s how we decide which tests to automate.

When is automated testing a good idea?

In general, the more often a test is run, the more worthwhile it is to automate. Tests that take a long time to complete and tests that run at night should also be automated. Approaches to test automation also vary by type of testing.

Regression testing. It’s appropriate to cover the main features and user scenarios with automated regression tests to make sure there are no critical issues before final product delivery.

Smoke testing. Automated testing provides quick feedback on a build’s status and helps decide how to approach further in-depth testing.

Data-driven testing. Automated tests are really helpful when you need to run one test with different inputs or with a large amount of data.
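Data-driven tests keep the inputs in a table and run the same test logic over every row. A sketch with `unittest`’s `subTest()`, using a hypothetical and deliberately simplified email validation rule (not a complete, standards-compliant check):

```python
import re
import unittest

# Hypothetical validation rule under test: a simplified email check.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[a-z]{2,}", re.IGNORECASE)


def is_valid_email(value: str) -> bool:
    return EMAIL_RE.fullmatch(value) is not None


# The test data lives in a table: adding a case means adding a row, not code.
EMAIL_CASES = [
    ("user@example.com", True),
    ("first.last@mail.example.org", True),
    ("no-at-sign.example.com", False),
    ("two@@example.com", False),
    ("spaces in@example.com", False),
    ("user@example", False),
]


class EmailValidationTests(unittest.TestCase):
    def test_email_cases(self):
        for value, expected in EMAIL_CASES:
            # subTest reports each failing row separately instead of stopping at the first one.
            with self.subTest(value=value):
                self.assertEqual(is_valid_email(value), expected)
```

With this layout, extending coverage means adding rows to `EMAIL_CASES`, and a large dataset could be loaded from a file into the same table.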

Performance and load testing. There are simply no manual alternatives to automated performance and load tests.

Tests that don’t need to be automated

User experience and usability tests that require human responses, as well as tests that can’t be fully automated, should not be automated. It’s also inefficient to automate tests that will run only once or that must be run right away, since writing the automated test would take longer than running it manually.

What types of automated tests do we use?

We use two types of automated tests.

Backend tests. As a rule, QA teams use backend tests for REST API and integration testing and for working with databases. Backend tests allow us to spot bugs in the early stages of development, before implemented features are passed to the frontend team. These tests are more stable and faster than GUI tests, so we get quick feedback on the build status. Integration testing makes sure integrated modules work as expected.
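A backend test calls the API directly and checks the status code, headers, and JSON body, with no GUI involved. In this self-contained sketch, a small local stub built with Python’s standard `http.server` module stands in for the real backend; the `/api/users/1` endpoint and its response are hypothetical:

```python
import json
import threading
import unittest
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class FakeApiHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the backend: GET /api/users/1 returns one user."""

    def do_GET(self):
        if self.path == "/api/users/1":
            body = json.dumps({"id": 1, "name": "Anna"}).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep test output quiet


class UserApiTests(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        # Port 0 lets the OS pick a free port.
        cls.server = ThreadingHTTPServer(("127.0.0.1", 0), FakeApiHandler)
        cls.base_url = f"http://127.0.0.1:{cls.server.server_address[1]}"
        threading.Thread(target=cls.server.serve_forever, daemon=True).start()

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()
        cls.server.server_close()

    def test_get_existing_user(self):
        with urllib.request.urlopen(f"{self.base_url}/api/users/1") as resp:
            self.assertEqual(resp.status, 200)
            self.assertEqual(resp.headers["Content-Type"], "application/json")
            self.assertEqual(json.load(resp), {"id": 1, "name": "Anna"})

    def test_unknown_user_returns_404(self):
        with self.assertRaises(urllib.error.HTTPError) as ctx:
            urllib.request.urlopen(f"{self.base_url}/api/users/999")
        self.assertEqual(ctx.exception.code, 404)
        ctx.exception.close()
```

In a real project, `base_url` would point to a test or staging server instead of the local stub.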

GUI and E2E tests for web and mobile platforms (Android and iOS). GUI automation tests mobile and web apps through their graphical user interface (GUI). The main advantage of GUI tests is that the app is tested exactly as an end user will use it. We can imitate user actions such as clicking, following links, and opening pages, and check how the app behaves. With end-to-end testing, we can check a business scenario from start to finish and verify that the system works as a whole.

Typically, manual QA specialists perform tests and create detailed test cases. After that, an Automation Testing Quality Control (ATQC) specialist writes scripts for the scenarios selected for automation and sets the time and frequency at which the tests run. We recommend running automated tests as part of CI/CD processes rather than manually.

Unfortunately, automated tests aren’t always cost-effective, especially for small projects. Automated tests should be written and maintained throughout the entire development process, just like any other code. This can make automated tests quite expensive.

Moreover, experienced QA automation engineers who provide QA consulting know that not all kinds of tests can be automated, and for some others automation simply doesn’t make sense. So before creating automated tests, we carefully weigh the pros and cons to decide whether your project needs them.

If you have any questions about our QA outsourcing and software testing services or full-cycle testing, drop us a line.
