The number of Internet of Things (IoT) devices worldwide will almost double from 15.1 billion in 2020 to 29 billion in 2030 according to research firm Transforma Insights. These IoT devices include a wide range of items, from medical sensors, smart vehicles, smartphones, fitness trackers, and alarms to everyday appliances like coffee machines and refrigerators.

For IoT manufacturers and solution providers, ensuring that every device functions correctly and can handle increasing loads is a significant challenge. This is where IoT testing steps in. It ensures that IoT devices and systems meet their requirements and deliver expected performance.

In this guide, we explore IoT testing and quality assurance (QA). Specifically, we cover:

- the importance of comprehensive testing for complex IoT systems

- expert insights on creating an end-to-end IoT testing environment

- real-world examples of how companies have succeeded with IoT quality assurance

The vital role of IoT testing

The success of IoT solutions largely relies on thorough testing and quality assurance. IoT testing ensures that devices work together smoothly in real-world situations, even as systems expand. It’s not just about connectivity — testing must guarantee flawless, efficient, and secure functionality despite unexpected challenges.

In fact, rigorous testing is necessary to:

- confirm that functionality, reliability, and performance meet expectations, even as systems scale

- identify security flaws and vulnerabilities that could lead to safety or privacy risks

- build trust in the system among stakeholders and users

IoT systems combine hardware, software, networks, and complex data flows that interact with the physical world. Given their complexity, each component requires thorough testing. Let’s take a closer look.

IoT systems: core components and complexity

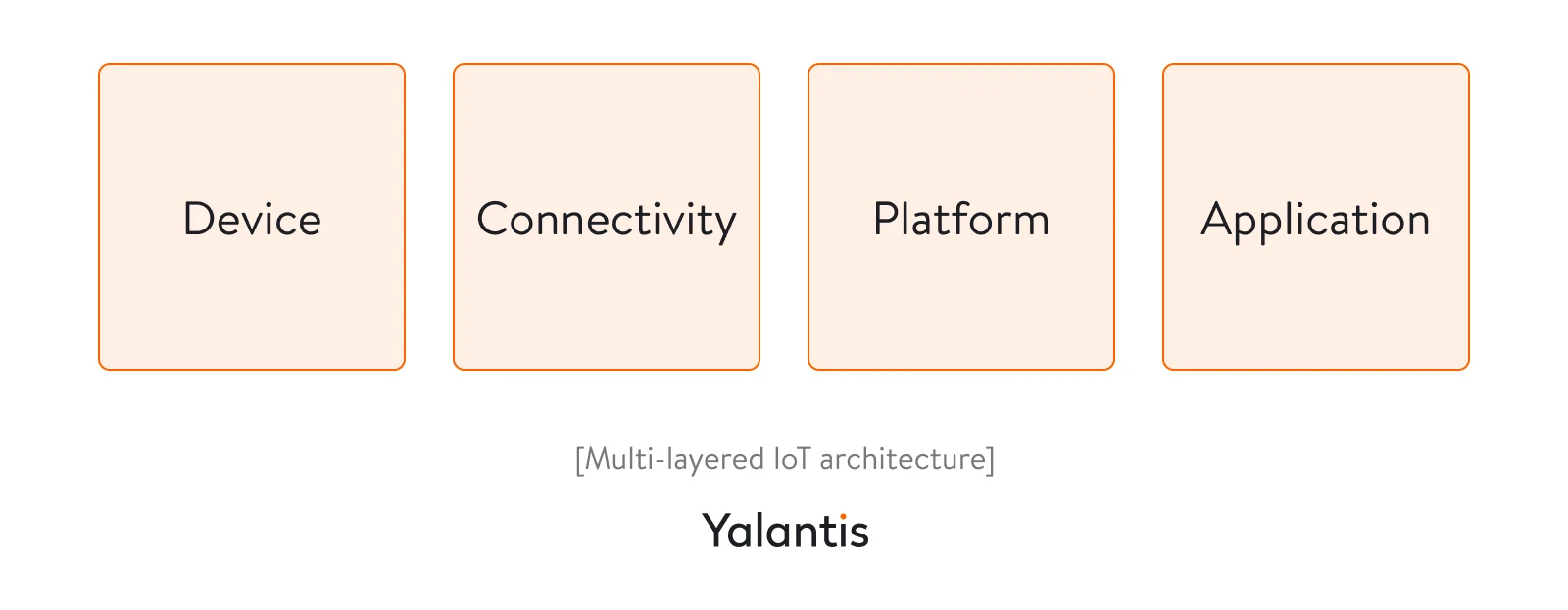

A typical IoT system consists of four main components, each of which should be tested.

- End devices (sensors and other devices that are the things in IoT)

- Specialized IoT gateways, routers, or other devices serving as such (for example, smartphones)

- Data processing centers (may include centralized data storage and analytics systems)

- Additional software applications built on top of gathered data (for example, consumer mobile apps)

Together, these components form a multi-layered architecture that constitutes every IoT solution:

- The things (devices) layer includes physical devices embedded with sensors, actuators, and other necessary hardware.

- The network (connectivity) layer is responsible for secure data transfer between devices and central systems, handling communication protocols, bandwidth, and data security.

- The middleware (platform) layer is the core of the IoT system, providing a centralized hub for data collection, storage, processing, and analytics. Notable middleware platforms include Microsoft Azure IoT, AWS IoT Core, Google Cloud IoT, and IBM Watson IoT.

- The application layer is where users interact with the IoT system. It converts device data into useful insights through user interfaces.

Complexities introduced by multi-layered IoT architecture

Such a multi-layered architecture brings several complexities:

- Diverse devices that require seamless interaction. An IoT system can comprise devices from different manufacturers, each with unique specifications, firmware, and behavior. For example, a smart home system may incorporate lightbulbs from Philips, door locks from Yale, and sensors from Bosch.

- Coexistence of various communication protocols. For instance, a wearable device may use Bluetooth to connect to a smartphone but need a Zigbee home gateway to link to the cloud. Since each protocol has a distinct transmission rate, ensuring seamless device compatibility with all these protocols is essential.

- Real-world integration challenges. IoT systems must seamlessly integrate data from different platforms and devices. For instance, an industrial monitoring system must consolidate data from machines and sensors into a unified dashboard while ensuring a seamless user experience.

- Real-time data processing. Many IoT applications require real-time data processing and large-scale analytics. For instance, a fleet management system must analyze real-time data from thousands of vehicles to optimize performance.

- Adaptation to new standards. As new wireless protocols like Thread or IEEE 802.11ah emerge, IoT ecosystems need to adapt and evolve.

These complexities emphasize the importance of IoT testing, and we’ll now explore various forms of IoT testing in detail.

Crucial types of IoT testing

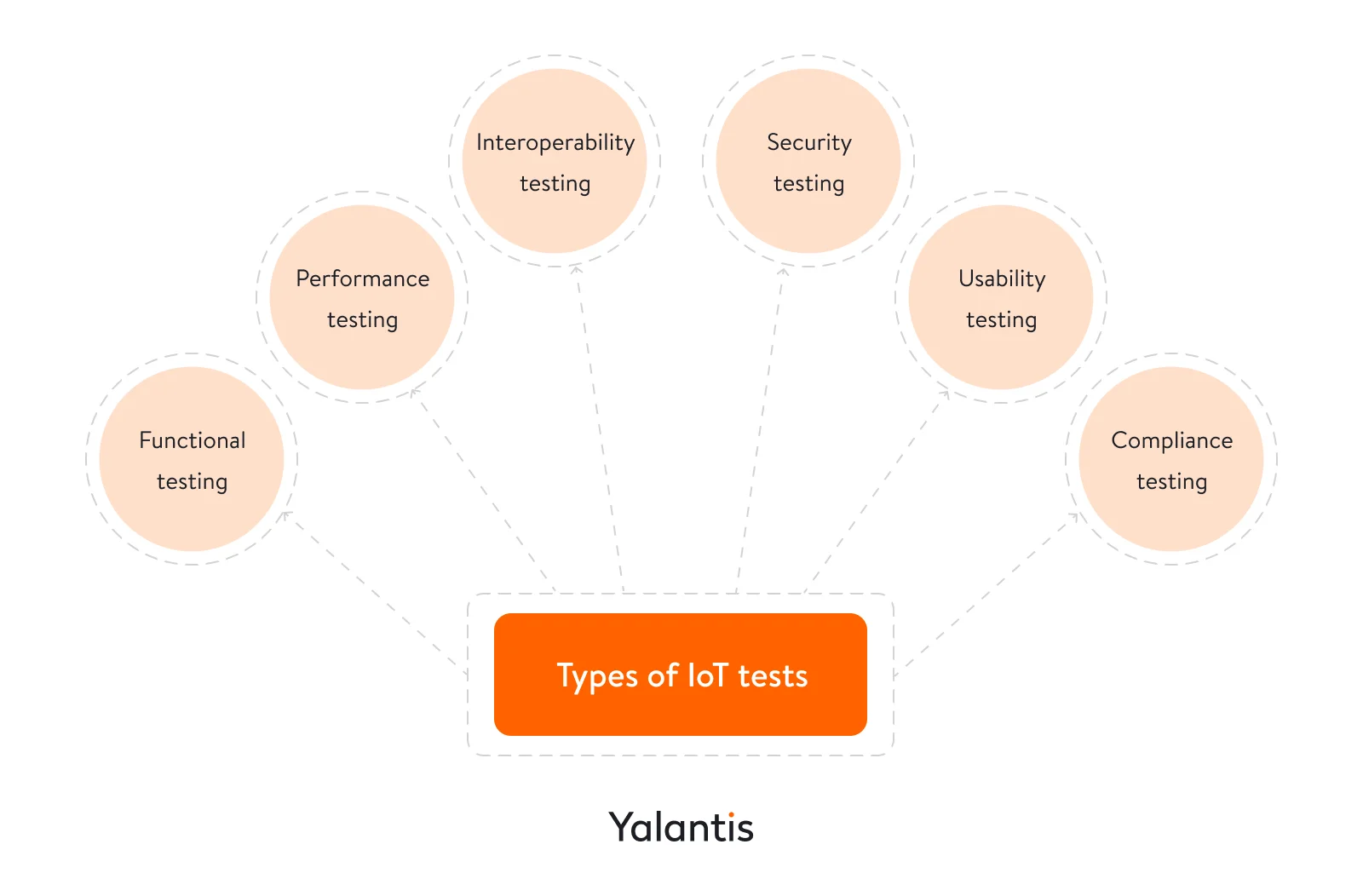

The main types of testing for IoT systems include:

- Functional testing ensures that the IoT solution works as intended by testing device interactions and user interface (UI) performance.

- Performance testing checks responsiveness, stability, and speed under typical and peak loads.

- Interoperability and compatibility testing ensures smooth communication between different devices, platforms, and protocols.

- Security testing identifies vulnerabilities, weaknesses, and threats through data encryption and privacy testing, physical security testing, and other methods.

- Usability testing evaluates real user interactions to enhance the user experience (UX).

- Compliance testing validates adherence to industry standards and government regulations.

- Network testing checks communications protocols like MQTT, Zigbee, and LoRaWAN.

- Resilience testing verifies system reliability under adverse conditions like hardware failures.

Each type of testing has its place in the product life cycle, but we’ll zero in on performance testing. This is vital for IoT systems to ensure they effectively handle real-world data, users, and connectivity demands, staying responsive as demand grows.

Having covered the significance of IoT testing and its various types, it’s time to explore how to actually perform IoT testing.

What does it take to test IoT solutions?

In some respects, IoT testing is no different from testing any web or desktop software application. To spot and reproduce an issue, you need to simulate the scenario in which it occurs on devices found within the IoT ecosystem. Given the variety of IoT devices that may be part of an ecosystem, however, IoT testing is more difficult than writing a script and running it, as you might when testing a mobile app.

To provide IoT product testing services, QA engineers usually spend hours planning and setting up the infrastructure. Then, it takes time to hone the testing process, report on the progress, and analyze the results. Of special concern is the process of building testing standards for end-to-end testing, as QA engineers need to develop auxiliary software solutions for load testing to emulate a large number of specific devices while preserving their essential characteristics.

How can you perform IoT testing?

To make sure an IoT ecosystem works properly, we need to test all of its functional elements and the communication among them. This means that IoT testing covers:

- Functional end device firmware testing

- Communication scenarios between end and edge devices

- Functional testing of data processing centers, including testing of data gathering, aggregation, and analytics capabilities

- End-to-end software solution testing, including full-cycle user experience testing

When planning IoT testing, service providers should decide which tests to apply and be prepared to combine different types of quality assurance and test scenarios.

IoT testing involves a full range of quality assurance services. The way engineers test the system depends on its current level of maturity, the assets available to perform testing, and the requirements formed by the product team.

Manual QA for functional testing

When it comes to testing IoT solutions, manual testing plays a crucial role in ensuring that all functional requirements are met. Manual QA engineers perform various tasks in IoT projects, such as:

- setting up functionality to test on real devices as well as emulators or simulators

- running tests on both real devices and emulators or simulators

- balancing tests on real devices with tests on emulators or simulators

Manual functional testing remains essential for IoT projects and is applicable in early development stages and throughout product refinement. However, due to the increasing complexity of IoT ecosystems, automation becomes a necessity for most solutions.

Automating tests for complex IoT systems

Here are some best practices for implementing automated testing in complex IoT systems:

- Select the right tests to automate. Not all tests benefit from automation. Focus first on repetitive tasks that are time-consuming, frequent, and tedious to perform manually.

- Invest in tailored tools. Choose testing platforms designed specifically for IoT protocols, devices, and architectures. Look for capabilities like network emulation and virtual device simulation.

- Modularize for maintainability. Break tests into modular, reusable scripts. This simplifies updating as requirements evolve.

- Simulate real-world conditions. Mimic real-life scenarios like varying network states, unexpected device failures, and different data loads. This builds resilience.

- Integrate into CI/CD pipelines. Include automated testing in continuous integration and deployment workflows. This enables rapid validation of code changes.

- Ensure scalability. As the IoT system expands, tests must scale smoothly, such as through cloud-based parallel testing.

- Regularly maintain tests. Review and update tests frequently as the system changes to keep them relevant.

- Provide detailed failure alerts. Automated tests should instantly alert developers of any failures with logs and descriptions to enable quick diagnosis.

- Go for virtual test environments. Virtual IoT simulations allow for efficient testing without physical devices and infrastructure.

- Incorporate real-world user feedback. Gather user feedback through monitoring and surveys. Prioritize issues based on importance, and focus on demonstrating responsiveness.
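As a rough illustration of the virtual device simulation and real-world condition practices above, here is a minimal sketch of an emulated sensor fleet with a lossy network link. The `VirtualSensor` class, the `run_fleet` helper, the temperature payload, and the `drop_rate` parameter are all hypothetical names chosen for this example; a real emulator would speak the device's actual protocol.

```python
import json
import random
from dataclasses import dataclass, field


@dataclass
class VirtualSensor:
    """Hypothetical emulated end device that produces temperature readings."""
    device_id: str
    drop_rate: float = 0.0  # share of messages lost by the emulated network
    rng: random.Random = field(default_factory=lambda: random.Random(42))

    def read(self, tick: int) -> dict:
        # Emulate a reading around 21 degrees Celsius with a little noise.
        return {
            "device_id": self.device_id,
            "tick": tick,
            "temperature_c": round(21.0 + self.rng.uniform(-0.5, 0.5), 2),
        }

    def send(self, tick: int, outbox: list) -> bool:
        # Emulate an unreliable link: some messages never arrive.
        if self.rng.random() < self.drop_rate:
            return False
        outbox.append(json.dumps(self.read(tick)))
        return True


def run_fleet(device_count: int, ticks: int, drop_rate: float) -> list:
    """Run a fleet of virtual sensors and return the messages that arrived."""
    outbox: list = []
    fleet = [
        VirtualSensor(f"sensor-{i}", drop_rate, random.Random(i))
        for i in range(device_count)
    ]
    for tick in range(ticks):
        for device in fleet:
            device.send(tick, outbox)
    return outbox


if __name__ == "__main__":
    arrived = run_fleet(device_count=100, ticks=10, drop_rate=0.1)
    print(f"{len(arrived)} of 1000 messages arrived")
```

Seeding each device's random generator keeps runs repeatable, which makes it easier to reproduce a failure that only appears under a particular pattern of message loss.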

Automation is especially valuable in the realm of performance testing. Let’s explore why.

QA automation for performance testing

Automation becomes a requirement as IoT systems scale. This scaling involves more devices generating vast amounts of data, often resulting in system degradation, various issues, and bottlenecks.

To identify and replicate issues related to system performance in scenarios with substantial data generation and sharing, we can use the following types of testing:

- Volume testing loads the database with large amounts of data and observes how the system aggregates, filters, and searches it. This type of testing checks the system for crashes, helps spot data loss, and verifies that data integrity is preserved.

- Load testing checks if the system can handle the given load. In terms of an IoT system, various scenarios can be covered depending on the test target. The load may be measured by the number of devices working simultaneously with the centralized data processing logic or by the number of end devices or packets a single gateway handles. The metrics for measuring load include response time and throughput rate.

- Stress testing measures how an IoT system performs when the expected load is exceeded. In performing stress tests, we aim to understand the breaking point of applications, firmware, or hardware resources and define the error rate. Stress testing also helps to detect code inefficiencies such as memory leaks or architectural limitations. With stress testing, QA engineers will understand what it takes for a system to recover from a crash.

- Spike testing verifies how an IoT system performs when the load is suddenly increased.

- Endurance testing checks if the system is able to remain stable and can handle the estimated workload for a long duration. Such tests are aimed at detecting how long the system and its components can operate in intense usage scenarios or without maintenance.

- Scalability testing measures system performance as the number of users and connected devices grows. It helps you understand the limits as traffic, data, and simultaneous operations increase and helps you predict if the system can handle certain loads. If the system breaks, there may be a need to rework its architecture or infrastructure.

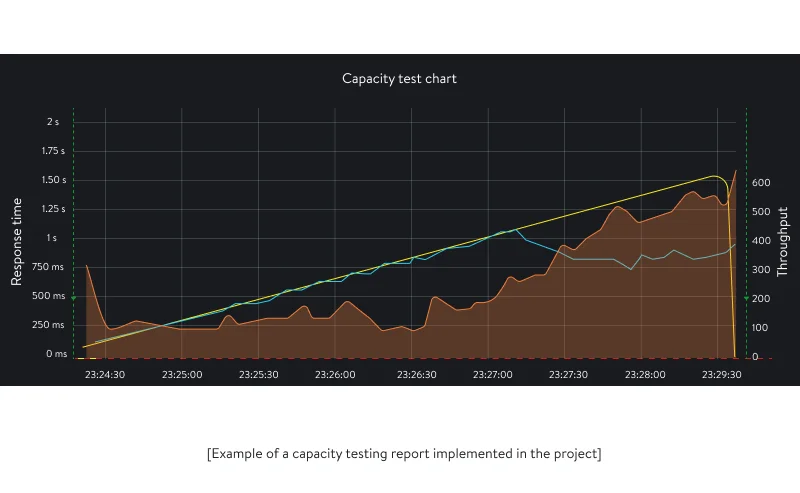

- Capacity testing determines how many users and connected devices an IoT application can handle before either performance or stability becomes unacceptable. Here, our aim is to detect the throughput rate and measure the response time of the system when the number of users and connected devices grows.
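Most of the test types above report the same core metrics: response time, throughput rate, and error rate. The sketch below shows one way such a summary could be computed from raw samples. The `summarize` function, the sample format, and the choice of a nearest-rank 95th percentile are assumptions made for illustration, not a description of any particular tool.

```python
import math


def percentile(values: list, pct: float) -> float:
    """Nearest-rank percentile; pct is in the range (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(samples: list, duration_s: float) -> dict:
    """Summarize one test run.

    samples: list of (response_time_s, succeeded) tuples, one per request.
    duration_s: wall-clock length of the run in seconds.
    """
    times = [t for t, _ in samples]
    failures = sum(1 for _, ok in samples if not ok)
    return {
        "requests": len(samples),
        "throughput_rps": len(samples) / duration_s,
        "avg_response_s": sum(times) / len(times),
        "p95_response_s": percentile(times, 95),
        "error_rate": failures / len(samples),
    }


if __name__ == "__main__":
    run = [(0.1, True)] * 18 + [(0.5, True), (2.0, False)]
    for name, value in summarize(run, duration_s=10.0).items():
        print(f"{name}: {value}")
```

Reporting a high percentile alongside the average matters because a handful of very slow responses can hide behind an acceptable mean.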

Running these types of performance tests becomes crucial at some point in every IoT project’s life. At the same time, checking the performance of a complex IoT system is itself a challenge, as it involves deploying complex infrastructure and programming a number of simulators and virtual devices to mimic an IoT network.

Why we focus on performance testing

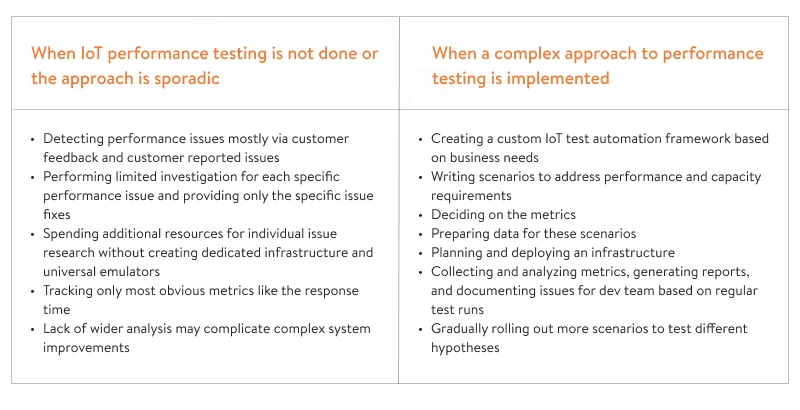

To understand the importance of performance testing for IoT projects, let’s compare what the QA process looks like without performance testing and with a comprehensive approach to testing.

At Yalantis, we provide IoT testing services for projects of different scales and complexities. Among our clients are well-known automotive brands and consumer electronics companies, manufacturers of IoT devices, and vehicle sharing startups. Their experience has proved that performance testing is an essential component of the success of every IoT system.

Embedding performance testing into the IoT development process requires a systematic approach. To make regular testing a part of your software development lifecycle (SDLC), we advocate implementing an IoT testing framework.

Internet of Things testing framework

An Internet of Things testing framework is a structure that consists of IoT testing tools, scripts, scenarios, rules, and templates needed for ensuring the quality of an IoT system.

An IoT testing framework contains guidelines that describe the process of testing performance with the help of dedicated tools. In a nutshell, implementing a performance testing framework helps mature IoT projects approach QA automation in a comprehensive and systematic way.

Although a framework establishes a system for regular performance measurement and contributes to continuous and timely project delivery, there are cases when it’s optional and cases in which such an approach to quality assurance is a must. The latter include:

- Projects with average loads

- Projects with high peak loads (during specific hours, time-sensitive events, or other periods)

- Rapidly growing projects

- Projects with strict requirements for fault tolerance; critical systems

- Projects sensitive to response time (solutions where decision-making relies on real-time data)

How to implement an Internet of Things testing framework

Quality assurance is an essential part of the SDLC. An IoT testing framework is usually implemented based on the following needs of an IoT service provider:

- Define the current load the system can handle

- Set up and measure the expected level of system performance

- Identify weak points and bottlenecks in the system

- Get human-readable reports

- Automate performance testing and conduct it regularly
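To make the last three needs more concrete, here is a minimal sketch of an automated check that compares measured metrics against expected limits and produces a readable report. The metric names, the limits, and the `check_targets` and `passed` helpers are hypothetical; in practice the targets would come from the business needs collected for the project.

```python
def check_targets(measured: dict, targets: dict) -> list:
    """Compare measured metrics with upper limits and return report lines."""
    lines = []
    for name, limit in targets.items():
        value = measured.get(name)
        if value is None:
            lines.append(f"MISSING {name}: no measurement collected")
        elif value <= limit:
            lines.append(f"PASS    {name}: {value} <= {limit}")
        else:
            lines.append(f"FAIL    {name}: {value} > {limit}")
    return lines


def passed(lines: list) -> bool:
    """A run passes only if every target was measured and met."""
    return all(line.startswith("PASS") for line in lines)


if __name__ == "__main__":
    # Hypothetical targets and measurements for illustration only.
    targets = {"p95_response_s": 2.0, "error_rate": 0.01}
    measured = {"p95_response_s": 1.4, "error_rate": 0.03}
    report = check_targets(measured, targets)
    print("\n".join(report))
    print("OK" if passed(report) else "Performance targets not met")
```

A check like this can run after every scheduled performance test so that a regression shows up as a failed run rather than a number buried in a dashboard.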

A typical flow for the IoT testing process includes four consecutive steps.

- Collect business needs

- Create testing scenarios

- Run performance tests

- Based on the needs specified, address issues and decide on possible improvements

A typical team for performance testing an IoT project should include the following specialists:

- A business analyst who’s in charge of understanding the needs of the IoT business and assisting with defining the scope of usage scenarios as well as the context in which they are applicable, key metrics, and customer priorities

- A solution architect to set up and deploy IoT testing infrastructure that covers necessary scenarios

- A DevOps engineer to streamline the process of IoT development by dealing with complex system components under the hood (for example, implementing or improving container mechanisms and orchestration capabilities for more effective CI/CD)

- A performance analyst (QA automation engineer) who’s responsible for engineering emulators for performance testing, running the tests, and measuring target performance indicators

Depending on the project’s complexity and maturity, the team may be extended by involving a backend engineer, a project manager, and more performance analysts.

What tasks can an IoT testing framework solve?

“What our clients usually lack in their testing strategy is regular assurance and reporting. A performance testing framework can bridge these gaps and is easily aligned with the company’s business processes.”

Alexandra Zhyltsova, Business Analyst at Yalantis

IoT testing framework infrastructure

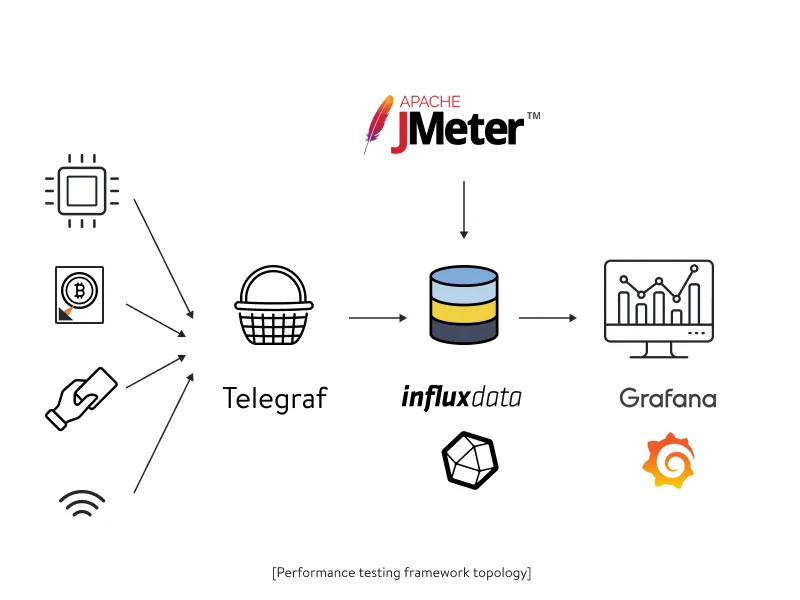

When deciding on the IoT testing infrastructure, a QA team starts by analyzing the given IoT system. Taking into account the specific requirements within the scope of performance testing, they decide on the tools to use and establish communication between them to receive the desired outcome, i.e. a detailed report of performance metrics. Although framework infrastructure differs from case to case, the aim is always to collect, analyze, and present data.

A typical process of IoT testing for performance measurement within the implemented framework includes the following steps:

- Data is collected from resources within the IoT system (devices, sensors).

- A third-party service (Telegraf) is used to collect server-side metrics like CPU temperature and load.

- Collected data is sent to a client-side app capable of analyzing and reporting performance-related metrics (for example, Grafana).

- An Internet of Things testing tool for performance measurement generates the load and collects the necessary metrics (JMeter).

- Analyzed data from both Telegraf and JMeter is retrieved and stored in a dedicated time-series database (InfluxDB).

- Data is presented using a data visualization tool (Grafana and built-in JMeter reports).
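Metrics usually reach InfluxDB as points in its text-based line protocol, which combines a measurement name, optional tags, one or more fields, and a timestamp. The sketch below formats a single point by hand to show what flows through the pipeline described above. The measurement, tag, and field names are hypothetical, and the function deliberately skips the escaping rules for special characters.

```python
def to_line_protocol(measurement: str, tags: dict, fields: dict, ts_ns: int) -> str:
    """Format one point as an InfluxDB line protocol string.

    Simplification: names and tag values must not contain spaces, commas,
    or equals signs, because this sketch does no escaping.
    """
    # Tags are written in sorted key order.
    tag_part = "".join(f",{key}={value}" for key, value in sorted(tags.items()))
    field_items = []
    for key, value in fields.items():
        if isinstance(value, bool):
            field_items.append(f"{key}={'true' if value else 'false'}")
        elif isinstance(value, int):
            field_items.append(f"{key}={value}i")  # integers carry an "i" suffix
        else:
            field_items.append(f"{key}={float(value)}")
    return f"{measurement}{tag_part} {','.join(field_items)} {ts_ns}"


if __name__ == "__main__":
    print(
        to_line_protocol(
            "response",
            {"scenario": "onboarding", "device": "gw-1"},
            {"elapsed_ms": 120, "cpu_load": 0.75},
            1700000000000000000,
        )
    )
```

Tags such as the scenario or device type are what later allow a dashboard to filter and group results per test scenario.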

When is the right time to implement an IoT testing framework?

“Of course, when the product is at the MVP stage, deploying a full-scale performance testing infrastructure is not something IoT providers should invest in. When the product is mature enough, a need to standardize the approach to testing appears.”

Alexandra Zhyltsova, Business Analyst at Yalantis

“The optimal time to get started with performance testing is two to three months before the software goes to full-scale production (alpha). However, you should make it a part of your IoT development strategy and put it on your agenda when you’re approaching the development phase. At the same time, there is no good or bad time to implement a performance testing framework. You achieve different aims with it at different stages.”

Artur Shevchenko, Head of QA Department at Yalantis

Below, we highlight some real cases where implementing a QA testing framework helped our clients prevent and solve various performance issues at different stages of the product life cycle.

Testing IoT: use cases

For this section, we’ve selected stories of three different clients that benefited from ramping up their strategy by using IoT testing services. Let’s overview how implementing an IoT testing framework worked for them.

RAK Wireless

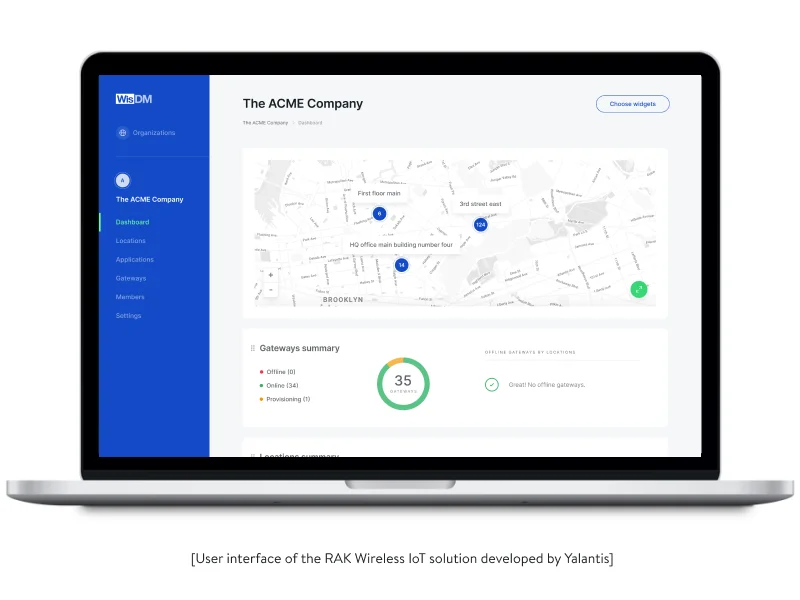

RAK Wireless is an enterprise company that provides a SaaS platform for remote fleet management. The product deals with the setup and management of large IoT networks, for which performance is crucial.

Initial context. We’ve been collaborating with RAK Wireless for two years. During this time, we’ve gradually moved from situational testing to implementing a comprehensive performance testing approach.

When the product was in private beta, the number of system users was limited, and there was no need to get started with performance testing. As development moved to public beta and then to an alpha release, we focused on estimating the system load, since we expected the number of users and devices connected to the IoT network to increase.

The solution’s main target customers are enterprises and managed service providers handling tens and hundreds of different IoT networks simultaneously. For such customers, performance is one of the key product requirements.

With more customers starting to use the product, the number of IoT devices was growing as well. To prevent any issues that could appear, the client considered changing the approach to IoT testing from ad hoc to a more comprehensive one.

Challenges. The product handles the full IoT network setup process, from connecting end devices to onboarding gateways and monitoring full network operability.

- The first challenge was to carefully indicate the testing targets and plan test scenario coverage. We chose a combination of single-operation scenarios and complex usage flow scenarios to define the metrics to collect at each step.

- Once test coverage was planned, another challenge was to properly emulate a number of proprietary IoT devices of different types to make sure we were using optimal infrastructure resources for a given scenario. We ended up creating several different types of emulators. For some scenarios, a data-sending emulation was enough; others required emulating full device firmware to replicate entire virtual devices.

What we achieved with the performance testing framework. We aimed to stay ahead of the curve and detect performance issues before any product updates were released. Implementing the testing framework allowed the product team to take preventive actions and plan cloud infrastructure costs. Transparent reporting and optimal measurement points allowed us to quickly report detected issues to the proper engineering team in charge of testing firmware, cloud infrastructure, or web platform management.

Automating performance testing allowed us to achieve consistency and ensure regular system compliance checks. A long-term performance optimization strategy helped us achieve and preserve the desired response time, balance system load by verifying connectivity with device data endpoints, and ensure system reliability under normal and peak loads.

Toyota

Initial context. Our client is a member of Toyota Tsusho, a corporation focused on digital solutions development and a member of the Toyota Group.

One of the products we helped our client develop was an end-to-end B2B solution for fleet management. Vehicles were equipped with smart sensors that connected to a system to send telemetry data over TCP.

Some of the features provided by the IoT solution included:

- Building and tracking routes for drivers

- Tracking vehicle usage details

- Providing real-time assistance to drivers along the route

- Providing ignition control

Challenges. Taking into account the scale of the business, the client needed to maintain a system that comprised about 1,000 end devices working flawlessly in real time.

Among the tasks we aimed to solve with the testing framework were:

- Ensuring the delay in system response to data received from devices didn’t exceed two seconds

- Preventing any breakdowns related to the performance of sensors tracking geolocation, vehicle mileage, and fuel consumption

- Establishing a reporting system that would allow for processing and storing up to 2.5 billion records over three months
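To get a feel for what these targets mean for test design, the back-of-the-envelope calculation below converts the record volume into a sustained ingest rate. It assumes that three months means 90 days and that all records arrive evenly from the roughly 1,000 end devices; neither assumption comes from the project itself, and real traffic is rarely this uniform.

```python
RECORDS = 2_500_000_000  # upper bound on records from the reporting target
DEVICES = 1_000          # approximate number of end devices in the system
DAYS = 90                # assumption: three months taken as 90 days

seconds = DAYS * 24 * 60 * 60
records_per_second = RECORDS / seconds
per_device_interval_s = DEVICES / records_per_second

print(f"{records_per_second:.1f} records/s across the fleet")
print(f"one record per device every {per_device_interval_s:.2f} s")
```

Under these assumptions the system must sustain a little over 320 records per second, or about one record from each device every three seconds, which gives a baseline rate for a simulator to reproduce before peak scenarios are added.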

What we achieved with the performance testing framework. We created a simulator to simultaneously run performance tests on the maximum number of end devices.

The framework included capacity testing and presented the results in reports that display a specified number of records.

Running volume tests helped us spot product infrastructure bottlenecks and suggest infrastructure improvements.

Miko 3

Initial context. Our client is a startup that produces hardware and software for kids, engaging them to learn and play with Miko, a custom AI-powered robot running on the Android operating system. We were dealing with the third version of the product, which quickly became extremely successful. The client was doing some manual and automated testing to detect and fix performance issues. But the lack of a systematic approach to quality assurance resulted in system breakdowns during periods of peak load.

Challenges. Issues with performance appeared after the company sold a number of robots during the winter holidays. When too many devices were connected to the network, customers started to report various issues that prevented their kids from playing with the robots. It became obvious that the system was not able to handle the load.

The client understood that they needed to adjust and expand their performance testing strategy, as the product was scaling and the load was only expected to grow further.

What we achieved with a performance testing framework. We aimed to establish a steady process of load testing for both the main use cases (such as voice recognition for Miko 3) and typical maintenance flows (such as over-the-air firmware updates).

Implementing the performance testing framework allowed us to build a system capable of collecting and analyzing detailed data about the system’s performance. Having this information, we managed to define the system’s limitations and detect bottlenecks in the architecture design as well as plan architectural optimizations.

Such an approach allowed us to systematically predict system load by measuring response times and throughput rates and analyzing error messages. A stable reporting process helped to distribute responsibility among the IoT testing team and optimize development costs.

Wrapping up

In a mature multi-component IoT ecosystem, performance testing is as crucial and technically demanding as end-to-end IoT testing.

The efforts invested in setting up a comprehensive testing framework with the required IoT application testing tools will pay off in detecting performance issues and improving the user experience before you get negative feedback from customers.

Such an approach to quality assurance brings transparency to system resource use and bottlenecks, allows you to estimate the system’s effective load capacity and scalability potential, helps you optimize infrastructure and costs, and supports decisions about improving the solution architecture.

FAQ

What is the main challenge of IoT device testing?

The main challenge of testing IoT devices and gateways is their diversity. In most cases, QA engineers need to emulate them. This takes time and requires the involvement of a highly skilled IoT development team.

What is the most cumbersome part of IoT testing, and what measures should you take?

The most cumbersome part of IoT testing is related to testing edge devices and gateways. In fact, for testing edge devices and IoT gateways, QA engineers perform the same test types they would do for traditional software testing. To test the network level (gateways) you would usually need functional, security, performance, and connectivity tests. To perform QA of edge devices, functional, security, and usability tests are required. Additionally, there is a need to run compatibility tests.

What is an Internet of Things testing framework?

An IoT testing framework is a structure that comprises the IoT testing tools, scripts, scenarios, rules, and templates required for ensuring the quality of an IoT system.
