Big data software testing services

Boost the ROI of your big data systems by ensuring their stability and reliability with comprehensive big data testing. Identify and eliminate performance bottlenecks, data quality issues, and integration problems to increase processing speed, reduce pipeline downtime, and maintain security and compliance.

Big data testing services Yalantis provides


Performance and scalability testing

- Auditing big data systems’ performance and scalability

- Simulating data processing scenarios based on real-world use cases

- Measuring and monitoring latency, throughput, and response time

- Identifying and implementing the most suitable performance optimizations

- Evaluating and optimizing resource utilization

- Stress testing to ensure system stability under peak loads
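To illustrate what measuring latency and throughput can involve, here is a minimal plain-Python sketch that summarizes one load-test run. It assumes per-request latencies have already been collected, for example exported from an Apache JMeter results file. The function names and sample numbers are hypothetical, and nearest-rank is only one of several common percentile definitions.

```python
import math
from statistics import mean


def percentile(sorted_values, p):
    """Nearest-rank percentile (p between 0 and 100) of a sorted list."""
    if not sorted_values:
        raise ValueError("no latency samples")
    rank = math.ceil(p / 100 * len(sorted_values))
    return sorted_values[max(rank, 1) - 1]


def summarize_run(latencies_ms, records_processed, wall_clock_s):
    """Summarize one load-test run as latency percentiles plus throughput."""
    ordered = sorted(latencies_ms)
    return {
        "mean_ms": mean(ordered),
        "p50_ms": percentile(ordered, 50),
        "p95_ms": percentile(ordered, 95),
        "p99_ms": percentile(ordered, 99),
        # Throughput: records handled per second of wall-clock time.
        "throughput_rps": records_processed / wall_clock_s,
    }


# Hypothetical sample: ten request latencies from a short stress run.
report = summarize_run(
    [12, 15, 11, 40, 13, 14, 90, 12, 16, 13],
    records_processed=10_000,
    wall_clock_s=20,
)
print(report)
```

Tracking tail percentiles (p95, p99) alongside the mean matters because a few slow requests, like the 90 ms outlier in this sample, can breach latency targets while the median still looks healthy.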


ETL/ELT testing

- Evaluating and validating ETL/ELT processes and pipelines

- Assessing and optimizing data quality verification

- Performing source-to-target validation

- Identifying data quality issues (data loss, corruption, inconsistency, duplication, etc.)

- Automating data validation processes

- Implementing incremental data load verification
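Source-to-target validation can be sketched in a few lines of plain Python. This minimal example assumes both sides fit in memory as lists of dicts sharing a unique business key. `source_to_target_report` is a hypothetical helper; at big data scale the same comparison would more likely run as a Spark join or through a tool such as Great Expectations or Deequ.

```python
from collections import Counter


def source_to_target_report(source_rows, target_rows, key):
    """Compare source and target records (dicts) matched on `key`.

    Assumes keys are unique in the source. Flags rows lost during loading,
    unexpected extra rows, duplicated keys in the target, and rows whose
    field values changed between source and target.
    """
    src = {row[key]: row for row in source_rows}
    tgt = {row[key]: row for row in target_rows}  # last duplicate wins
    target_key_counts = Counter(row[key] for row in target_rows)

    return {
        "source_count": len(source_rows),
        "target_count": len(target_rows),
        "missing": sorted(src.keys() - tgt.keys()),
        "unexpected": sorted(tgt.keys() - src.keys()),
        "duplicates": sorted(k for k, n in target_key_counts.items() if n > 1),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Hypothetical sample records: id 3 never reaches the target.
source = [
    {"id": 1, "amount": 10},
    {"id": 2, "amount": 20},
    {"id": 3, "amount": 30},
]
target = [
    {"id": 1, "amount": 10},
    {"id": 2, "amount": 25},  # value changed in transformation
    {"id": 2, "amount": 25},  # row loaded twice
    {"id": 4, "amount": 40},  # row with no source counterpart
]
report = source_to_target_report(source, target, key="id")
print(report)
```

Each non-empty list maps to one of the data quality issues named above: `missing` to data loss, `mismatched` to corruption or inconsistency, and `duplicates` to duplication. For incremental loads, the same comparison could be restricted to records changed since the previous load.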


Security and compliance testing

- Evaluating security and access controls against regulatory requirements

- Identifying compliance gaps in data security and privacy measures

- Verifying data storage security with penetration testing

- Pinpointing security risks in user authentication and authorization protocols

- Performing comprehensive vulnerability assessments for big data applications


Real-time data processing testing


Integration testing

- Ensuring seamless, uninterrupted data flow across internal and external data sources

- Testing compatibility across APIs, third-party services, and enterprise systems

- Validating data integrity and quality across the integrated tools

- Assessing integration performance and behavior under varying data volumes


Big data quality assurance

- Assessing data consistency, accuracy, and completeness

- Setting up data profiling and cleansing automation

- Implementing deduplication and validation processes

- Resolving data quality issues revealed during data quality testing

- Defining data quality metrics based on business requirements

- Implementing data quality monitoring and continuous improvement
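As a rough illustration of turning data quality requirements into measurable metrics, the sketch below computes completeness, duplicate rate, and rule-based validity for a batch of records and flags any metric that misses its threshold. The field names, the age rule, and the threshold values are all hypothetical stand-ins for real business rules.

```python
def quality_metrics(rows, required_fields, key, rules):
    """Compute completeness, duplicate rate, and rule validity for dict rows.

    `rules` maps a field name to a predicate that valid values must satisfy.
    """
    total = len(rows)
    if total == 0:
        return {"rows": 0, "completeness": {}, "duplicate_rate": 0.0, "validity": {}}

    # Completeness: share of rows where a required field is present and non-empty.
    completeness = {
        field: sum(1 for r in rows if r.get(field) not in (None, "")) / total
        for field in required_fields
    }
    # Duplicate rate: share of rows beyond the first occurrence of each key.
    duplicate_rate = (total - len({r.get(key) for r in rows})) / total
    # Validity: share of rows whose value exists and passes the business rule.
    validity = {
        field: sum(1 for r in rows if r.get(field) is not None and check(r[field])) / total
        for field, check in rules.items()
    }
    return {"rows": total, "completeness": completeness,
            "duplicate_rate": duplicate_rate, "validity": validity}


def failed_checks(metrics, min_completeness=0.99, max_duplicate_rate=0.0, min_validity=0.99):
    """Return the names of metrics that miss their (hypothetical) thresholds."""
    failures = [f"completeness:{f}" for f, v in metrics["completeness"].items()
                if v < min_completeness]
    if metrics["duplicate_rate"] > max_duplicate_rate:
        failures.append("duplicates")
    failures += [f"validity:{f}" for f, v in metrics["validity"].items() if v < min_validity]
    return failures


# Hypothetical batch with one empty email, one duplicated id, and one invalid age.
rows = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": 29},
    {"id": 2, "email": "b@example.com", "age": -5},
    {"id": 3, "email": "c@example.com", "age": 41},
]
metrics = quality_metrics(
    rows,
    required_fields=["id", "email"],
    key="id",
    rules={"age": lambda age: 0 <= age <= 120},
)
print(failed_checks(metrics))
```

In practice the thresholds would come from the business requirements the metrics are meant to reflect, and running the same check on every incoming batch is one simple way to turn these metrics into ongoing monitoring.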


Data governance framework

- Developing documentation on data management policies and standards

- Establishing or improving data ownership, access controls, and accountability policies

- Designing or optimizing data cataloguing and classification workflows

- Performing governance audits to ensure internal compliance with the framework

- Improving data visibility and controls with metadata management

Technologies Yalantis works with

- Great Expectations

- Deequ

- Apache Airflow

- Apache JMeter

- Kafka

- pytest

- Spark

- Testcontainers

Industry-specific use cases Yalantis specializes in

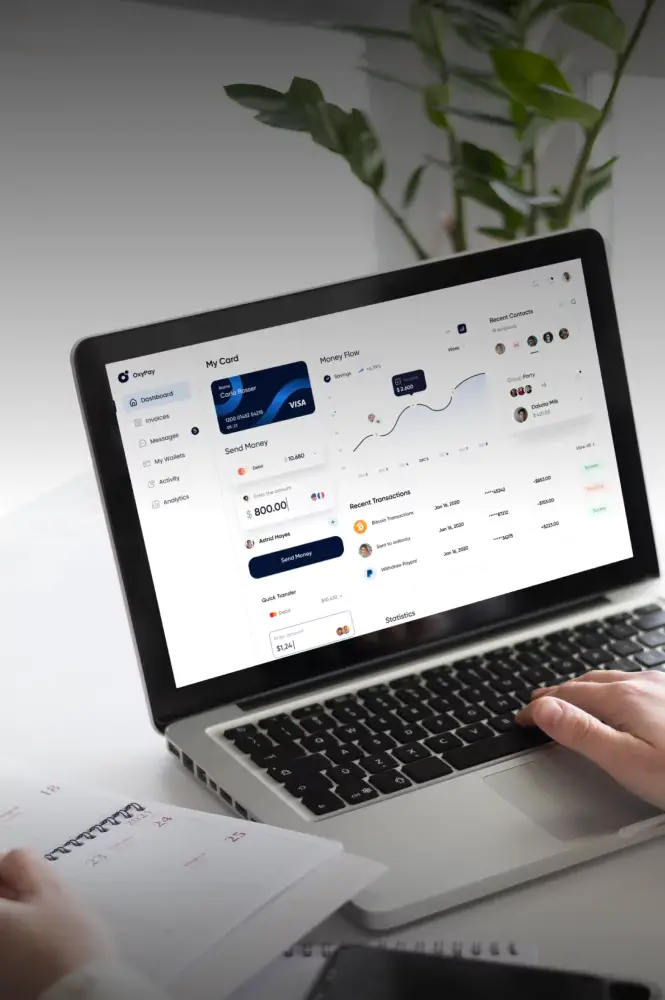

Finance

Keep your fraud detection and risk assessment accurate, maintain compliance, and ensure long-term scalability with our big data analytics testing.

Healthcare

Check your systems’ interoperability with big data platforms, validate the reliability of your predictive models, and verify HIPAA compliance.

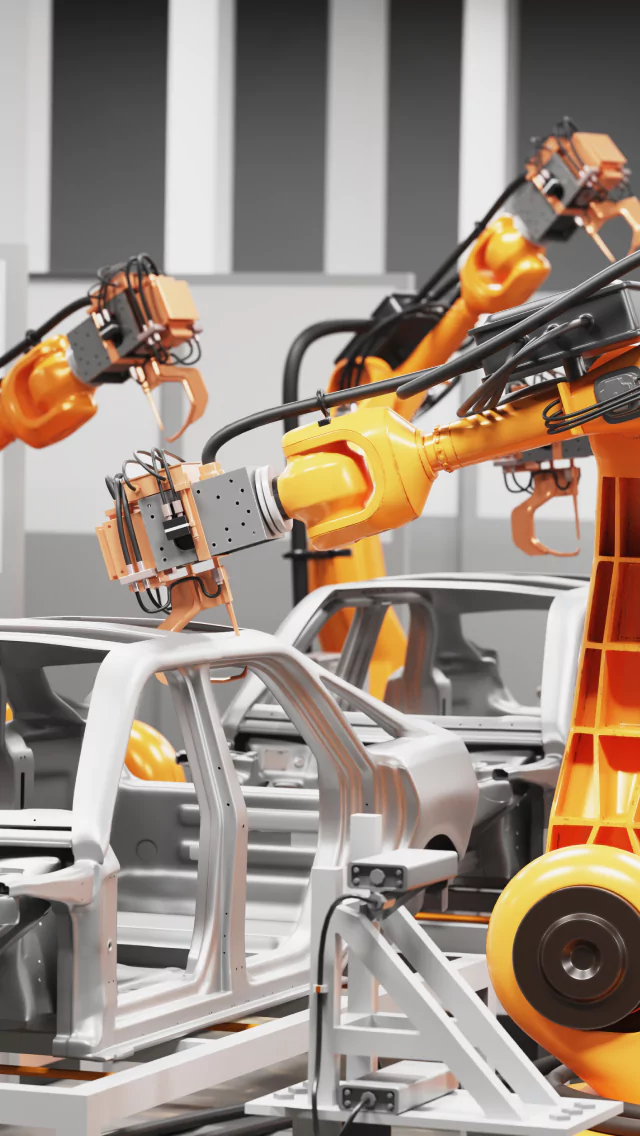

Manufacturing

Keep your big data insights accurate and your predictive analytics systems scalable with a big data testing solution that supports IoT data.


Results our big data testing services deliver


Reduced downtime

Prevent system interruptions and maintain business continuity with big data analytics testing that verifies your systems stay operational under peak data loads.


Increased data processing speed

Maintain consistently high performance even when your data processing tasks involve large, complex, high-velocity datasets.


Improved data quality

Test your big data for quality, integrity, and consistency so your analytics rely on accurate data that adheres to predefined business rules.


Lower TCO

Optimize resource utilization and reduce data quality assurance costs with test automation tools and effective processes set up by big data experts.

Insights into our big data expertise

An enterprise data governance framework: Solve your biggest challenges with data security, quality, compliance

Is data chaos costing your business growth and innovation? Discover how to tackle data challenges around security, consistency, quality, and compliance with a single solution.

Choosing the best database solution for your project

We’ve compared the most common database management systems and are ready to share our recommendations. Find out which database best fits your software solution.

How to Ensure Efficient Real-Time Big Data Analytics

Find out the key stages of handling real-time big data efficiently and learn which tools and technologies can streamline the process.

FAQ

What business risks can big data testing help prevent?

Effective big data testing helps companies mitigate reputational, operational, compliance, and security risks by ensuring high data quality, visibility, traceability, and reliability. Thoroughly testing big data solutions can also help overcome challenges such as inconsistent user experiences and infrastructure cost overruns.

Can you help us reduce infrastructure costs through testing?

Yes. We can help you reduce infrastructure costs by identifying and eliminating redundant data processing, inefficient resource utilization, and performance bottlenecks. We can also help you cut costs through more efficient batch job scheduling, lower error rates, and data deduplication.

How do you ensure compliance during your big data testing?

First, we identify all applicable standards, laws, and regulations based on your industry and jurisdiction. We then adapt our testing methodology to the identified requirements, which can involve data minimization and comprehensive reporting. We can also consult you on maintaining compliance throughout the entire data lifecycle, including your testing processes.

How do you handle scalability issues during big data testing?

Our team uses test automation tools such as Apache JMeter to simulate heavy loads with large volumes of structured and unstructured data when testing big data applications. If testing reveals scalability issues, our big data engineering specialists can increase your solution's throughput and reduce its latency with techniques such as parallel processing.

Contact us

Tell Us About Your Project & Get Expert Advice


Lisa Panchenko

Senior Engagement Manager

Your steps with Yalantis

- Schedule a call

- We collect your requirements

- We offer a solution

- We succeed together!